Introduction

Cybersecurity threats have reached unprecedented levels. According to a 2024 report by Statista, global cybercrime damages are projected to reach $13.8 trillion annually by 2028, with attacks occurring every 11 seconds. Traditional security tools (firewalls, signature-based detection systems, and manual monitoring) can no longer keep pace with the volume and sophistication of modern threats.

Artificial Intelligence (AI) is fundamentally changing how organizations defend themselves. Unlike legacy systems that rely on known attack signatures, AI-powered solutions can detect emerging threats in real time, respond automatically to incidents, and continuously adapt to evolving attacker tactics. However, the reality is more nuanced than vendor marketing suggests.

The AI cybersecurity market grew from $23.12 billion in 2024 to $28.51 billion in 2025, with projections climbing to $136 billion by 2032. While every major cybersecurity vendor now touts AI-powered threat detection, predictive analytics, and autonomous response systems, this article examines what AI actually delivers, where it falls short, and which organizations genuinely benefit from the investment.

What AI in Cybersecurity Actually Means

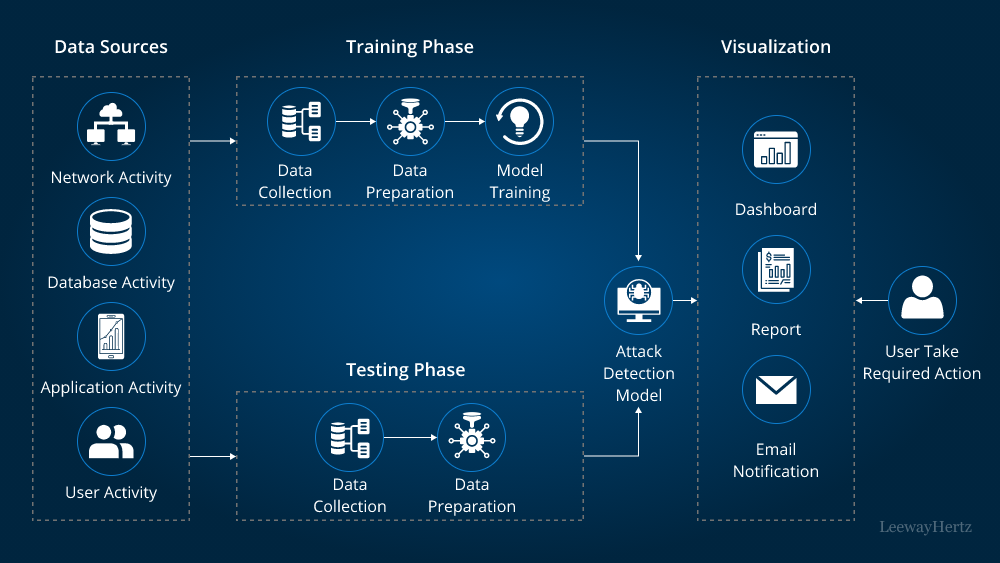

AI in cybersecurity refers to machine learning algorithms that analyze network traffic, user behavior, and system activities to detect anomalies indicating potential threats. Unlike signature-based detection (which only catches known malware), AI systems learn patterns of normal behavior and flag deviations. A user suddenly downloading gigabytes of data at 3 AM triggers alerts not because it matches a malware signature, but because it deviates from that user's established pattern.
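The baseline-and-deviation idea can be illustrated with a minimal sketch. It assumes a single feature (daily download volume per user) and a simple z-score test; the function name, the sample history, and the threshold of 3 standard deviations are all hypothetical, and commercial products use far richer models than this.

```python
from statistics import mean, stdev

def is_anomalous(history_mb, observed_mb, threshold=3.0):
    """Flag an observation that sits far outside a user's own baseline.

    history_mb: past daily download volumes (MB) for one user.
    threshold: number of standard deviations treated as unusual (assumed value).
    """
    baseline = mean(history_mb)
    spread = stdev(history_mb)
    if spread == 0:
        # Perfectly flat history: any change at all is a deviation.
        return observed_mb != baseline
    z_score = (observed_mb - baseline) / spread
    return abs(z_score) > threshold

# Hypothetical user who normally downloads 110-150 MB per day.
history = [120, 135, 110, 150, 128, 142, 117]
print(is_anomalous(history, 140))    # a typical day
print(is_anomalous(history, 8000))   # several gigabytes in one night
```

Nothing in this check knows what malware looks like. It only knows what this particular user usually does, which is why it can flag activity that matches no signature.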

The technology relies primarily on three approaches:

- Machine Learning: Models that train on historical attack data to recognize threat patterns and improve continuously with new data.

- Behavioral Analytics: Systems that establish baselines for users, devices, and network traffic, then identify outliers and anomalies.

- Natural Language Processing: Analysis of threat intelligence feeds, security logs, and dark web chatter to identify emerging attack vectors.

The distinction between AI, machine learning, and deep learning matters when evaluating vendor claims. True machine learning systems improve with exposure to new data, while many "AI-powered" security tools simply use complex rule sets with no learning capability.

Figure 1: Data Sources, Training Phase, and Visualization in AI threat detection systems

Research from 2024 shows that machine learning systems now recognize 98% of phishing attempts through real-time anomaly detection. The overall threat monitoring capacity has expanded to over 150 billion events per day. Bug discovery speed has increased roughly 11 times compared with human testing.

But these capabilities come with caveats vendors rarely emphasize upfront. The systems need vast amounts of quality data to function properly. They generate false positives that require human investigation. Most critically, they work best as augmentation for skilled security teams, not as a replacement for them.

Why AI Matters in Cybersecurity (And Its Limitations)

Handling Massive Data Volumes

Traditional security operations centers (SOCs) are drowning in data. According to JumpCloud research, organizations receive an average of 11,067 security alerts daily, yet only about 5% are investigated due to resource constraints. This creates massive blind spots.

AI solves this through efficient data processing:

- Volume Processing: AI can analyze millions of events per second across networks, endpoints, and cloud systems. Microsoft Defender processes over 9.6 trillion signals daily to detect threats.

- Alert Prioritization: Rather than treating all alerts equally, AI ranks threats by severity and business impact, allowing security teams to focus on what matters most.
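As a rough illustration of alert prioritization, the sketch below ranks alerts by a hand-written score (severity multiplied by asset criticality) and keeps only as many as the team can investigate. The `Alert` class, both 1-to-5 scales, and the scoring rule are assumptions made for this example; a real platform would learn its ranking from data rather than use a fixed formula.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    name: str
    severity: int           # 1 (low) to 5 (critical), assumed scale
    asset_criticality: int  # 1 (test box) to 5 (core business system), assumed scale

def priority(alert: Alert) -> int:
    # Simple product: high severity on a critical asset floats to the top.
    return alert.severity * alert.asset_criticality

def triage(alerts, capacity):
    """Return the `capacity` highest-priority alerts, highest first."""
    return sorted(alerts, key=priority, reverse=True)[:capacity]

alerts = [
    Alert("port scan on test server", severity=2, asset_criticality=1),
    Alert("ransomware behavior on file server", severity=5, asset_criticality=5),
    Alert("failed logins on HR laptop", severity=3, asset_criticality=3),
    Alert("outdated browser on kiosk", severity=1, asset_criticality=2),
]

for alert in triage(alerts, capacity=2):
    print(priority(alert), alert.name)
```

With capacity for two investigations, the ransomware alert and the failed logins are surfaced, and the two low-impact alerts wait.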

Adapting to Evolving Threats

Cybercriminals continuously develop new attack methods, malware variants, and social engineering tactics. Traditional rule-based systems struggle because they can only detect threats matching known signatures.

AI overcomes this limitation through pattern recognition that identifies threats even if they differ slightly from known strains. According to Gartner research, AI-powered systems can detect previously unknown (zero-day) vulnerabilities by identifying abnormal code execution patterns and suspicious system behavior.

Real example: When the WannaCry ransomware emerged in 2017, organizations with legacy defenses were devastated. However, AI-powered systems from vendors like CrowdStrike and Microsoft identified the threat within minutes by recognizing its infection patterns and file encryption behavior, despite it being previously unknown.

Reducing Human Error and Burnout

Cybersecurity teams are stretched thin. The (ISC)² 2024 Cybersecurity Workforce Study found that 51% of security professionals work more than 40 hours per week, and burnout is common. This fatigue leads to missed alerts and delayed responses.

AI reduces human burden by automating routine tasks, maintaining consistent performance regardless of time of day, and providing contextualized recommendations that allow analysts to make faster, better-informed decisions.

How the Leading Platforms Actually Perform

Three vendors dominate the AI cybersecurity conversation: Darktrace, CrowdStrike Falcon, and CylancePROTECT. Each takes a different technical approach that matters more than marketing materials suggest.

Darktrace uses unsupervised machine learning to create what it calls an "Enterprise Immune System." The platform learns normal behavior for every user, device, and network connection without predefined rules. Thoma Bravo's roughly $5.3 billion acquisition of Darktrace, announced in April 2024 and completed that October, validated the company's self-learning approach.

Darktrace excels at spotting insider threats and zero-day exploits because it doesn't rely on signature databases. A legitimate employee account behaving unusually gets flagged even if no malware is present. The weakness shows up in complex environments where "normal" behavior varies widely. A developer downloading large codebases, a data analyst running queries at odd hours, or a designer working with huge media files all generate alerts that security teams must manually investigate.

CrowdStrike Falcon takes an endpoint-focused approach, processing data from millions of endpoints using its threat graph, which visualizes relationships between indicators of compromise. CrowdStrike's market capitalization reached $58 billion by 2021, making it the most valuable pure-play cybersecurity company.

Falcon's strength lies in forensic depth and threat hunting capabilities. When an attack occurs, security teams can trace exactly what happened, which systems were compromised, and how the threat propagated. The limitation emerges in distributed environments mixing cloud, on-premises, and IoT devices where endpoint agents become complex to manage.

CylancePROTECT uses predictive AI to block malware before execution, analyzing file attributes and behavioral patterns to predict malicious intent without signature updates. Cylance was founded in 2012 and sold to BlackBerry for $1.4 billion in 2018. The platform can detect zero-day threats, but the approach generates more false positives than behavioral systems.

Platform Comparison: Features and Pricing

| Feature | Darktrace | CrowdStrike Falcon | CylancePROTECT |
|---|---|---|---|
| Detection Method | Unsupervised ML, behavioral | Threat graph, indicators | Predictive AI, file analysis |
| Best For | Insider threats, zero-days | Forensics, threat hunting | Zero-day malware blocking |
| Starting Price | ~$40,000/year | $8/endpoint/month | $25-45/endpoint/year |
| Enterprise Cost | $200K-$500K+/year | $96K-$300K/year (1000 endpoints) | $25K-$45K/year (1000 endpoints) |
| False Positive Rate | Medium (varies by environment) | Low-Medium | Medium-High |
| Setup Complexity | High (requires tuning) | Medium | Medium-High (constant tuning) |

Source: Darktrace vs CrowdStrike Comparison - AI Flow Review

The False Positive Problem Nobody Talks About

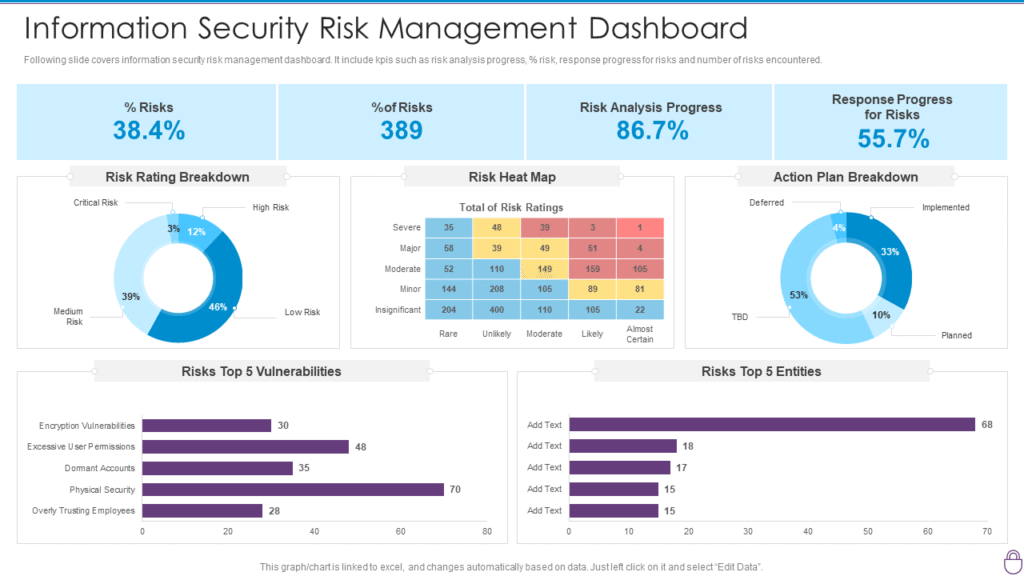

Figure 2: Risk analysis dashboards help visualize security posture, but false positives from AI systems create alert fatigue

58% of security professionals report that investigating false positives takes longer than fixing actual threats. 72% say false positives negatively impact team productivity, and over half of security teams now ignore alerts that frequently prove benign.

This creates a dangerous paradox. Organizations generate more security data than ever before (79 zettabytes in 2025), yet genuine threats slip through while analysts waste time investigating harmless activities. Studies indicate up to 68% of critical vulnerabilities remain unresolved due to resource constraints and analyst overwhelm.

The root cause traces to training data quality. AI algorithms are only as good as the data used to train them. Cybersecurity professionals must utilize current, unbiased datasets of malicious code, malware, and anomalies. This represents a heavy lift that still doesn't guarantee accurate results.

Behavioral analytics tools like Darktrace reduce false positives compared to signature-based systems by learning what's normal for specific organizations. But "normal" in modern enterprises encompasses developers using GitHub Copilot, data scientists running ML models, and employees accessing cloud apps from coffee shops. Each legitimate use case trains the AI differently, meaning a system tuned for a financial services firm performs poorly when deployed at a tech startup.

The economic impact proves substantial. Many investigations take 10 to 40 minutes per alert. A security team handling 100 daily alerts (modest for mid-sized enterprises) therefore spends roughly 17 to 67 analyst-hours per day investigating, with perhaps 10% of alerts representing genuine threats. This alert fatigue contributes directly to analyst burnout and turns cybersecurity from strategic defense into reactive firefighting.
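The hours range above follows from simple arithmetic, reproduced here so the inputs can be swapped for an organization's own numbers. The 8-hour shift used to convert hours into headcount is an assumption added for illustration, not a figure from the cited research.

```python
def daily_investigation_hours(alerts_per_day, minutes_per_alert):
    """Total analyst time needed to look at every alert once, in hours."""
    return alerts_per_day * minutes_per_alert / 60

low = daily_investigation_hours(100, 10)
high = daily_investigation_hours(100, 40)
print(f"{low:.1f} to {high:.1f} analyst-hours per day")

# Assumed 8-hour shift: full-time analysts needed just to review every alert.
SHIFT_HOURS = 8
print(f"{low / SHIFT_HOURS:.1f} to {high / SHIFT_HOURS:.1f} analysts")
```

At 100 alerts per day this works out to between about two and eight full-time analysts doing nothing but triage.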

Attackers Use the Same Tools

The most unsettling aspect of AI in cybersecurity isn't its technical limitations but the arms race dynamics. Attackers have gained access to the same AI capabilities defenders use, leveling advantages enterprises paid millions to obtain.

Generative AI enables threat actors with limited technical skills to craft convincing phishing emails that adapt to recipients' communication styles. AI/ML tool usage grew nearly sixfold (a reported 594.82%), rising from 521 million AI-driven transactions in April 2023 to 3.1 billion monthly by January 2024. Much of this growth came from legitimate business use, but the barrier to entry dropped for attackers too.

Adversarial Attacks

Adversarial attacks represent the most sophisticated threat. Bad actors manipulate AI systems by feeding them poisoned data or crafting scenarios that trigger false positives. These attacks exploit specific vulnerabilities to bypass detection. A subtle manipulation imperceptible to humans causes the model to misclassify malicious data as benign.

Data poisoning impacts seem subtle but gradually sabotage model performance. Attackers input incorrect data into training datasets, modifying AI functionality to create false predictions. Model inversion attacks extract information about training data by repeatedly querying systems and examining outputs, potentially leaking proprietary information or individual user data.
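A toy example shows how data poisoning can shift a detector. It assumes a deliberately simple model, an alert threshold set at the mean plus three standard deviations of "normal" transfer sizes, and made-up numbers throughout. Real attacks are usually slower and subtler, and real detectors are more complex, but the failure mode is the same.

```python
from statistics import mean, stdev

def fit_threshold(training_values, k=3.0):
    """Learn an alert threshold as mean + k standard deviations of 'normal' data."""
    return mean(training_values) + k * stdev(training_values)

# Hypothetical outbound transfer sizes (MB) seen during normal operation.
clean = [100, 110, 95, 105, 120, 90, 115, 100, 108, 97]

# The attacker slips inflated samples into the data the model retrains on.
poison = [400, 600, 800, 1000, 1200]
poisoned = clean + poison

exfiltration = 900  # size of the transfer the attacker wants to hide

clean_threshold = fit_threshold(clean)
poisoned_threshold = fit_threshold(poisoned)

print(f"clean threshold:    {clean_threshold:.0f} MB, "
      f"flagged: {exfiltration > clean_threshold}")
print(f"poisoned threshold: {poisoned_threshold:.0f} MB, "
      f"flagged: {exfiltration > poisoned_threshold}")
```

Trained on clean data, the detector flags the 900 MB transfer. After retraining on the poisoned set, the threshold has moved far enough that the same transfer passes as normal.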

According to SentinelOne's research on AI Security Risks, these adversarial techniques represent emerging threats that most organizations are unprepared for.

Research from BlackBerry revealed 75% of organizations worldwide support bans on ChatGPT and other generative AI applications in the workplace, citing concerns that these apps pose cybersecurity threats.

The practical implication: AI doesn't provide sustainable advantage in the attacker-defender dynamic. Both sides gain capability simultaneously, shifting the battle to who implements faster and adapts quicker. Organizations expecting AI to solve security challenges independently will find themselves disappointed when attackers leverage identical tools.

Cost Reality Check: Who Actually Benefits

Implementation costs represent a significant barrier that vendor marketing downplays. Getting started with AI cybersecurity requires not just licensing fees but also infrastructure investment, dataset preparation, and internal expertise.

Darktrace's pricing typically starts around $40,000 annually for small deployments, scaling based on network size and features. Enterprise implementations run $200,000 to $500,000 annually.

CrowdStrike Falcon pricing begins around $8 per endpoint monthly for basic protection, reaching $25+ per endpoint for complete extended detection and response (XDR) capabilities. An organization with 1,000 endpoints pays $96,000 to $300,000 annually.
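The annual range for 1,000 endpoints is per-endpoint monthly pricing multiplied out. The helper below is a hypothetical name and covers licensing only, with none of the deployment, tuning, or training costs.

```python
def annual_license_cost(endpoints, price_per_endpoint_month):
    """Licensing cost per year, before deployment, tuning, or training."""
    return endpoints * price_per_endpoint_month * 12

for tier, price in [("basic protection", 8), ("full XDR", 25)]:
    print(f"{tier}: ${annual_license_cost(1000, price):,} per year")
```

Changing the endpoint count shows how quickly per-endpoint pricing scales with fleet size.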

These figures exclude deployment costs, ongoing tuning, and staff training. Organizations require access to quality datasets to train systems and internal expertise to build tailored solutions. Smaller enterprises without these resources find ROI calculations unfavorable compared to managed security service providers offering similar protection at fixed monthly costs.

Who Benefits?

Large Enterprises (1,000+ employees): The math works differently for large enterprises with distributed operations, regulatory compliance requirements, and skilled security teams. A financial institution processing millions of transactions daily faces potential breach costs measured in tens of millions of dollars. Organizations with fully deployed AI threat detection systems contained breaches within 214 days, versus 322 days for those using legacy systems. That 108-day difference translates directly into reduced damage for companies facing sophisticated persistent threats.

Mid-sized Organizations (50-500 employees): These businesses often achieve better results by combining traditional endpoint protection with managed detection and response services. Such services incorporate AI without the implementation complexity or internal expertise that a direct deployment requires.

Startups and Small Businesses (under 50 employees): These rarely justify dedicated AI cybersecurity investments. Basic endpoint protection, network segmentation, multi-factor authentication, and security awareness training provide more cost-effective protection. The estimated $10 trillion in cybercrime costs for 2025 disproportionately impacts larger organizations with valuable data, not small businesses running standard operations.

Real-World Deployment Considerations

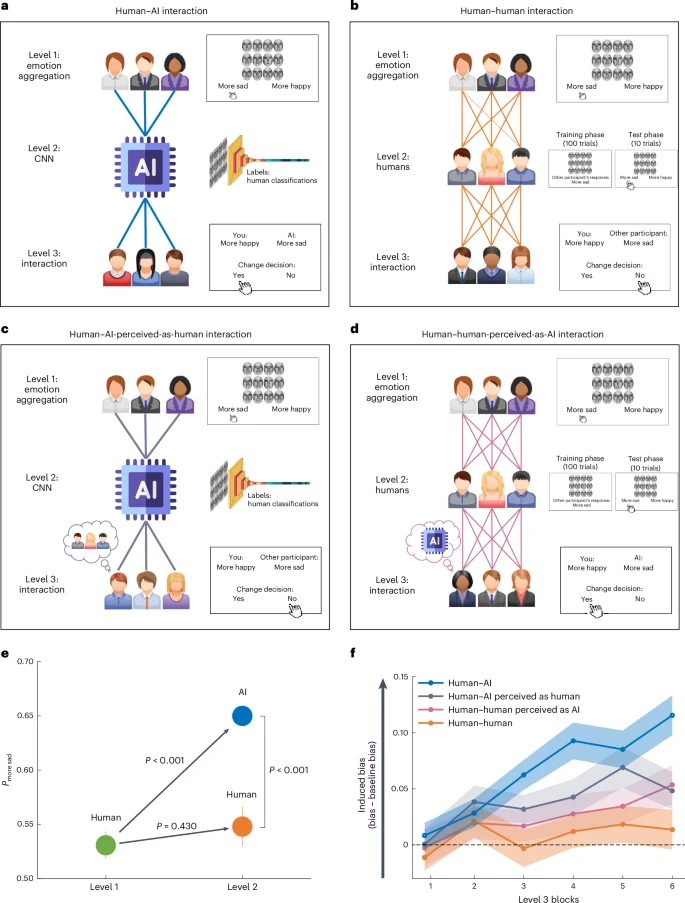

Figure 3: Human-AI collaboration models show how AI augments human decision-making in security operations

Integration with existing infrastructure matters more than isolated capabilities. Almost 90% of security professionals prefer platform approaches to collections of individual security tools. Organizations already using Microsoft security tools benefit from Security Copilot integration across Azure services. Those standardized on Palo Alto firewalls gain more from Cortex XDR than best-of-breed alternatives requiring separate management.

Cloud Security represents the fastest-growing segment. Organizations migrating operations to cloud platforms face expanded attack surfaces. Cloud security tools using AI for continuous monitoring and automated response address this exposure, though implementation remains challenging across multi-cloud environments using AWS, Azure, and Google Cloud simultaneously.

Industry-Specific Considerations:

- Financial services and healthcare face stringent regulatory requirements that favor established vendors with compliance certifications

- Manufacturing and operational technology environments need specialized protection for industrial control systems

- Government agencies require specific compliance certifications and data sovereignty protections

The Human Factor ultimately determines success or failure. AI reduces workload for skilled analysts by filtering noise and prioritizing threats. But organizations expecting AI to replace security staff or compensate for inadequate training face disappointment. Human security professionals remain essential for training models, interpreting complex results, and making judgment calls machines can't handle.

What Nobody Tells You About AI Cybersecurity

Transparency and Explainability Issues

AI decision-making processes remain opaque, making it difficult to understand why events were flagged or how attacks were detected. This lack of explainability makes troubleshooting and system improvement challenging, particularly in regulated industries requiring audit trails.

Bias and Discrimination

AI algorithms inherit biases from source datasets, potentially leading to unfairly targeting certain user groups or overlooking threats from others. Complete objectivity proves nearly impossible when historical data reflects human prejudices.

Resource and Computational Overhead

AI algorithms need significant processing power and storage capacity to analyze large data volumes. Organizations must evaluate whether on-premises infrastructure can handle these demands or whether cloud-based solutions make more sense despite data sovereignty concerns.

The Skills Gap

Almost one-third of organizations need cybersecurity vendors to explain how AI is being used in offered solutions. This knowledge deficit leaves organizations unable to properly evaluate competing products or optimize deployed systems.

Vendor Lock-In

AI systems trained on organization-specific data become deeply embedded in security operations. Switching vendors means retraining new systems from scratch, losing institutional knowledge accumulated over years. This creates significant switching costs that vendors understand and price accordingly.

The Verdict: When AI Makes Sense

AI in cybersecurity delivers measurable benefits for specific use cases but doesn't represent a universal solution.

Large enterprises with mature security operations, regulatory compliance requirements, and skilled analyst teams gain genuine advantage. AI improves threat detection by 60% in these environments, reduces investigation time from hours to minutes, and enables predictive defense strategies.

Mid-sized organizations achieve better ROI through managed services incorporating AI rather than deploying platforms directly. This approach provides AI benefits without implementation complexity or internal expertise requirements.

Small businesses and startups rarely justify dedicated AI cybersecurity investments. Resources directed toward fundamental security hygiene (endpoint protection, network segmentation, employee training, regular patching) provide more cost-effective protection. The attacks that typically target these organizations are rarely sophisticated enough to require AI-powered defense.

The technology will continue evolving rapidly. Gartner predicts that by 2028, multi-agent AI in threat detection and incident response will increase from 5% to 70% of AI applications, mainly assisting staff rather than replacing them. This shift toward augmentation over automation reflects industry recognition that human judgment remains irreplaceable.

Organizations evaluating AI cybersecurity should focus less on vendor marketing claims and more on specific operational needs, existing tool ecosystems, and realistic capability expectations. AI represents powerful technology when properly deployed, but the gap between promise and reality remains wider than most vendors acknowledge.

Frequently Asked Questions

Q: How much does AI cybersecurity cost compared to traditional security tools?

A: AI cybersecurity platforms typically cost 3-5x more than traditional tools. Enterprise deployments range from $100,000 to $500,000+ annually for platforms like Darktrace or CrowdStrike Falcon XDR, versus $20,000-$75,000 for traditional endpoint protection and SIEM systems. However, organizations with fully deployed AI systems contain breaches 108 days faster on average, potentially offsetting higher upfront costs through reduced damage.

Q: Can AI completely replace human security analysts?

A: No. AI reduces workload by filtering alerts and detecting patterns, but human analysts remain essential for training models, investigating complex threats, making judgment calls, and handling situations requiring business context. Studies show AI works best augmenting skilled teams rather than replacing them. Organizations expecting AI to eliminate security staff typically experience worse outcomes than those using AI to enhance analyst productivity.

Q: Do smaller businesses need AI cybersecurity tools?

A: Rarely. Small businesses (under 50 employees) typically achieve better protection through fundamental security practices at lower cost. Endpoint protection, network segmentation, multi-factor authentication, employee training, and regular patching provide more cost-effective defense. AI platforms require significant investment and expertise that smaller organizations lack. Managed security services offering AI-powered monitoring represent better options when advanced capabilities become necessary.

Q: How do I know if a cybersecurity vendor's AI claims are legitimate?

A: Request specific performance metrics with independent verification. Ask for false positive rates, mean time to detect (MTTD), mean time to respond (MTTR), and breach containment statistics from third-party testing. Vendors offering only marketing claims without data should raise red flags. Check whether the platform uses actual machine learning (requiring training on your data) versus rules-based detection marketed as "AI-powered."
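If a vendor supplies raw trial data rather than summary claims, these metrics can be recomputed independently. The sketch below uses invented incident records and alert counts, and it assumes MTTD runs from intrusion start to detection and MTTR from detection to resolution. Definitions vary between vendors, so confirm which one is in use.

```python
from datetime import datetime
from statistics import mean

# Hypothetical incident records from a product trial.
incidents = [
    {"start": "2025-01-03 02:00", "detected": "2025-01-03 06:00",
     "resolved": "2025-01-03 18:00"},
    {"start": "2025-02-10 09:00", "detected": "2025-02-10 11:00",
     "resolved": "2025-02-11 11:00"},
]

def hours_between(earlier, later):
    """Elapsed hours between two 'YYYY-MM-DD HH:MM' timestamps."""
    fmt = "%Y-%m-%d %H:%M"
    delta = datetime.strptime(later, fmt) - datetime.strptime(earlier, fmt)
    return delta.total_seconds() / 3600

mttd = mean(hours_between(i["start"], i["detected"]) for i in incidents)
mttr = mean(hours_between(i["detected"], i["resolved"]) for i in incidents)

# Share of alerts that proved benign. SOC teams usually call this the
# false positive rate; strictly speaking it is a false discovery rate.
total_alerts, benign_alerts = 400, 340  # hypothetical trial counts
benign_share = benign_alerts / total_alerts

print(f"MTTD: {mttd:.1f} h, MTTR: {mttr:.1f} h, benign alerts: {benign_share:.0%}")
```

Computing the numbers from raw records also makes it easier to compare vendors on the same definitions.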

Q: What's the biggest risk of implementing AI cybersecurity?

A: False confidence leading to reduced vigilance. Organizations deploying AI often decrease other security measures, assuming the technology provides complete protection. In reality, AI generates false positives requiring human investigation, misses novel attack methods, and can be manipulated through adversarial techniques. The biggest danger comes from treating AI as a silver bullet rather than one tool in a comprehensive security strategy requiring ongoing attention.

Sources and Further Reading

- Artificial Intelligence in Cybersecurity Market Report 2025 - Market size data showing growth from $23.12B to $28.51B in 2025

- AI Cybersecurity Solutions Market Analysis - Mordor Intelligence - Vendor market share, acquisition data, platform comparison details

- AI In Cybersecurity Statistics 2025 - ElectroIQ - Performance metrics including 98% phishing detection rate, 150B daily events monitored

- How Effective Is AI for Cybersecurity Teams? - JumpCloud - False positive statistics, investigation time data, breach containment metrics

- Top AI Security Risks in 2025 - SentinelOne - Adversarial attack methods, data poisoning techniques, model inversion threats

- AI in Cybersecurity Trends - Lakera - AI/ML tool usage growth, investment patterns, adoption statistics

- (ISC)² 2024 Cybersecurity Workforce Study - Workforce burnout, staffing challenges, industry trends

- IBM 2024 Data Breach Report - Breach containment metrics, cost analysis, AI impact on detection time