Introduction

Traditional drones crash into obstacles. AI-powered drones see them coming and fly around them. The difference matters when you’re filming in a forest, tracking a moving subject through complex terrain, or flying in unfamiliar environments where quick decisions prevent expensive crashes.

As of 2024, approximately 85% of consumer drones priced over $500 include obstacle avoidance systems, with 70% of new models since 2022 using AI vision-based technology rather than simple sensors. The technology has evolved rapidly since DJI introduced basic forward-facing sensors in the Phantom 4 in 2016. Modern AI-powered systems don’t just detect obstacles. They understand the environment, predict safe paths, and adapt their behaviour based on flight conditions and mission objectives.

This article explains how AI obstacle avoidance works in drones at the technical level, how it differs from traditional sensor systems, and where each approach excels. You’ll understand what happens in the milliseconds between a drone’s camera detecting a tree branch and the flight controller executing an avoidance manoeuvre, why some drones navigate autonomously through forests while others are only dependable in open spaces, and what limitations still exist despite AI improvements.


AI obstacle avoidance in drones uses computer vision cameras and neural networks to detect objects, classify them, estimate distances, and plan avoidance manoeuvres in real time. Unlike basic sensor systems that simply stop when detecting obstacles, AI systems analyse the 3D environment at 30-60 frames per second, distinguish between different object types, predict movement trajectories, and autonomously navigate around obstacles while maintaining flight objectives. Detection range typically spans 15-30 meters with response times of 50-200 milliseconds.

Table of Contents

- What Obstacle Avoidance Actually Does

- How Traditional Sensor Systems Work

- What AI Adds to Obstacle Detection

- The Computer Vision Process Behind AI Avoidance

- Real-World Performance: AI vs Sensor-Based Systems

- Current Limitations and Trade-Offs

- Which Approach Fits Your Flying Needs

- FAQ

What Obstacle Avoidance Actually Does

Obstacle avoidance systems prevent drones from colliding with objects during flight by detecting potential hazards, calculating safe paths, and either alerting the pilot or autonomously executing avoidance manoeuvres. This differs fundamentally from GPS-based navigation, which handles positioning and waypoint following but cannot detect physical objects the drone might encounter.

The core function addresses the primary cause of drone crashes: spatial awareness failure. When pilots lose visual contact with their drone (behind trees, buildings, or simply too far away), they lose depth perception and environmental context. Obstacle avoidance acts as the drone’s eyes, continuously monitoring the flight path and surrounding space for potential collisions.

Operating Modes

The system operates in multiple modes depending on the drone and flight conditions:

Assisted Mode: The drone alerts the pilot to detected obstacles but allows manual override, giving experienced pilots flexibility while providing safety warnings.

Autonomous Mode: The drone takes full control when obstacles are detected, stopping, hovering, or navigating around hazards without pilot input. Advanced systems offer intelligent flight paths where the drone plans and executes complex manoeuvres (flying around a building, threading through trees) while the pilot focuses only on high-level direction.

The Physics of Detection and Response

The effectiveness depends entirely on what the system can detect and how quickly it can respond. A drone traveling at 15 meters per second (33 mph) covers 1.5 meters in just 100 milliseconds. If the obstacle detection system has 200ms latency (time from detection to avoidance action), the drone travels 3 meters between seeing an obstacle and responding. This is why detection range, processing speed, and maximum flight velocity are intrinsically linked in obstacle avoidance systems.
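The same arithmetic extends to a full stopping distance: the distance covered during the latency window plus the braking distance v²/(2a). Below is a minimal Python sketch, assuming the drone holds speed until it responds and then brakes at a constant rate; the 6 m/s² deceleration is an illustrative assumption, not a published figure for any drone.

```python
def stopping_distance_m(speed_mps: float, latency_s: float, decel_mps2: float) -> float:
    """Distance from first detection until the drone comes to rest.

    Assumes the drone holds speed for the whole latency window,
    then brakes at a constant deceleration (an idealization).
    """
    if decel_mps2 <= 0:
        raise ValueError("deceleration must be positive")
    reaction_m = speed_mps * latency_s                # covered before any response
    braking_m = speed_mps ** 2 / (2.0 * decel_mps2)   # v^2 / (2a) while slowing down
    return reaction_m + braking_m


# 15 m/s from the example above; 6 m/s^2 braking is an assumed, illustrative value
for latency_ms in (50, 100, 200):
    d = stopping_distance_m(15.0, latency_ms / 1000.0, decel_mps2=6.0)
    print(f"{latency_ms:>3} ms latency: {d:.1f} m to stop from 15 m/s")
```

Under these assumptions braking dominates and latency adds the 0.75-3 meters described above, which is why the required detection range rises steeply with flight speed.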

The ultimate goal is enabling safer, more confident flight in complex environments. Whether you’re a filmmaker capturing dynamic footage, a surveyor inspecting infrastructure, or a hobbyist exploring new locations, obstacle avoidance reduces the fear of expensive crashes and lets you focus on the mission rather than on constant collision vigilance.

How Traditional Sensor Systems Work

Traditional obstacle avoidance relies on distance-measuring sensors: ultrasonic sensors, infrared sensors, or basic time-of-flight (ToF) sensors. These emit signals (sound waves or light pulses) and measure how long they take to bounce back from objects, calculating distance through simple physics.

Ultrasonic Sensors

Ultrasonic sensors work like echolocation. The drone emits high-frequency sound pulses (typically 40 kHz, above human hearing) and measures the echo return time. Sound travels at approximately 343 meters per second, and the pulse covers the distance twice (out and back), so an echo returning in 0.01 seconds indicates an object about 1.7 meters away (343 × 0.01 ÷ 2). These sensors are inexpensive, reliable, and work in any lighting condition, making them popular for downward-facing sensors that assist with landing and low-altitude hovering.
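The echo calculation can be written out directly. This sketch also applies a common linear approximation for the speed of sound in dry air (about 331.3 m/s at 0 °C, rising roughly 0.6 m/s per °C) to show why temperature variations, listed among the limitations below, skew readings when a sensor assumes a fixed 343 m/s. Function names are illustrative.

```python
def speed_of_sound_mps(air_temp_c: float) -> float:
    """Linear approximation for dry air: ~331.3 m/s at 0 degC, +0.606 m/s per degC."""
    return 331.3 + 0.606 * air_temp_c


def echo_distance_m(round_trip_s: float, wave_speed_mps: float = 343.0) -> float:
    """Range to the reflector: the pulse travels out and back, so halve the path."""
    return wave_speed_mps * round_trip_s / 2.0


echo_s = 0.01  # the 10 ms echo from the example above
print(f"Assumed 343 m/s: {echo_distance_m(echo_s):.3f} m")
for temp_c in (0.0, 35.0):
    actual = echo_distance_m(echo_s, speed_of_sound_mps(temp_c))
    print(f"Actual at {temp_c:.0f} degC: {actual:.3f} m")
```

At 1.7 meters the error is only a few centimeters either way, but it scales with distance and compounds the wind and noise problems.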

The limitations are significant:

- Limited range: typically 5-8 meters maximum

- Narrow detection cones: often 15-30 degrees

- Material sensitivity: struggle with sound-absorbing materials like fabric or foam

- Outdoor unreliability: wind, temperature variations, and ambient noise interfere with acoustic measurements

- No object classification: provide only distance information with no understanding of what the obstacle actually is

Infrared Sensors

Infrared sensors improve on some limitations by using light instead of sound. Time-of-flight infrared sensors emit light pulses (usually near-infrared, invisible to human eyes) and measure reflection time using photodetectors. These offer faster response times than ultrasonic sensors and aren’t affected by wind, but they share the narrow field-of-view limitation and add sensitivity to lighting conditions. Bright sunlight can overwhelm infrared sensors, and dark or light-absorbing surfaces may not reflect enough signal for accurate measurement.
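Swapping sound for light changes the timing problem by roughly six orders of magnitude. The sketch below reuses the out-and-back formula to compare round-trip times and shows the timing resolution a light-based sensor needs for centimeter-level ranging; the 1 m and 1 cm distances are illustrative.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0
SPEED_OF_SOUND_MPS = 343.0


def round_trip_time_s(distance_m: float, wave_speed_mps: float) -> float:
    """Time for a pulse to reach an object and return."""
    return 2.0 * distance_m / wave_speed_mps


for label, speed in (("ultrasonic", SPEED_OF_SOUND_MPS), ("infrared ToF", SPEED_OF_LIGHT_MPS)):
    print(f"{label:>12}: object at 1 m -> {round_trip_time_s(1.0, speed):.3e} s round trip")

# Resolving a 1 cm change in range with light needs roughly 67 picosecond timing
print(f"1 cm of range with light = {round_trip_time_s(0.01, SPEED_OF_LIGHT_MPS) * 1e12:.1f} ps")
```

The much shorter round trip is also why light-based sensors respond faster than ultrasonic ones.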

Processing and Control

The logic for sensor-based systems is straightforward. When any sensor detects an object within a threshold distance (programmable, typically 3-5 meters for forward sensors), the flight controller triggers a response: stop forward motion, hover in place, or back away slowly. Some systems allow the drone to ascend or descend if only forward obstacles are detected, but the logic remains simple and reactive.
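The reactive logic fits in a few lines. This is a hypothetical sketch, not any manufacturer's firmware: the 4 m threshold is taken from the 3-5 meter range quoted above, while the 1.5 m back-away distance and the `above_clear` flag (standing in for an upward sensor) are assumptions.

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    CONTINUE = "continue"
    HOVER = "hover"
    ASCEND = "ascend"
    BACK_AWAY = "back_away"


def reactive_response(forward_m: Optional[float], above_clear: bool = False,
                      threshold_m: float = 4.0, critical_m: float = 1.5) -> Action:
    """Threshold comparison on the latest forward reading (None = no echo in range)."""
    if forward_m is None or forward_m >= threshold_m:
        return Action.CONTINUE          # nothing inside the trigger distance
    if forward_m < critical_m:
        return Action.BACK_AWAY         # too close: retreat slowly
    # Inside the threshold: climb if the (assumed) upward sensor reports clear, else hover
    return Action.ASCEND if above_clear else Action.HOVER


print(reactive_response(6.0), reactive_response(3.0), reactive_response(3.0, above_clear=True))
```

Every decision depends only on the current reading: there is no memory, no map, and no notion of which way around an obstacle is better.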

Battery consumption for traditional sensors is minimal because they require little computational power. The sensors themselves draw modest current, and the flight controller runs basic comparison logic (is distance less than threshold?) rather than complex algorithms. This is why early obstacle avoidance systems had negligible impact on flight time.

Where Sensor Systems Succeed and Fail

The sensor-based approach works adequately for specific scenarios: landing assistance (downward sensors detecting ground), hovering near walls or ceilings (proximity detection), and low-speed forward flight in open areas. It fails in complex environments with multiple obstacles, offers no path planning capability, and cannot distinguish between different types of objects or predict how to navigate through cluttered spaces.

What AI Adds to Obstacle Detection

Understanding how AI obstacle avoidance works requires examining the shift from simple distance measurement to intelligent environment understanding.

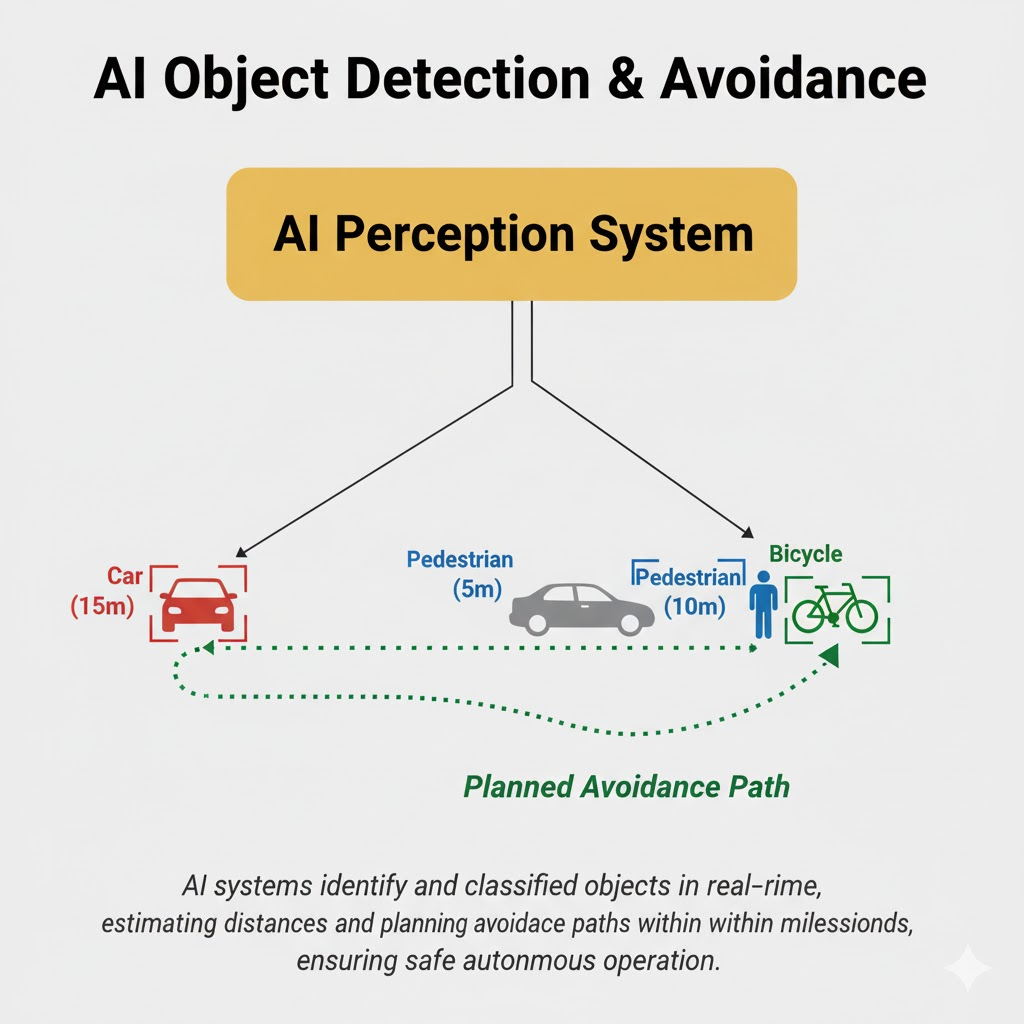

AI obstacle avoidance replaces or supplements simple distance sensors with computer vision cameras and neural networks that understand the environment. Instead of detecting “something is 3 meters ahead,” the system analyses “there’s a tree trunk at 3 meters, branches at 2.5 meters to the left, and open space at 4 meters to the right.”

The Neural Network Foundation

The AI component consists of convolutional neural networks (CNNs) trained on millions of images of various obstacles, environments, and flight scenarios. These networks learn to identify objects (trees, buildings, people, vehicles, power lines), estimate their distance using stereo vision or monocular depth estimation, and predict safe navigation paths. The entire inference pipeline runs onboard the drone at 30-60 frames per second, providing real-time understanding of the surrounding environment.

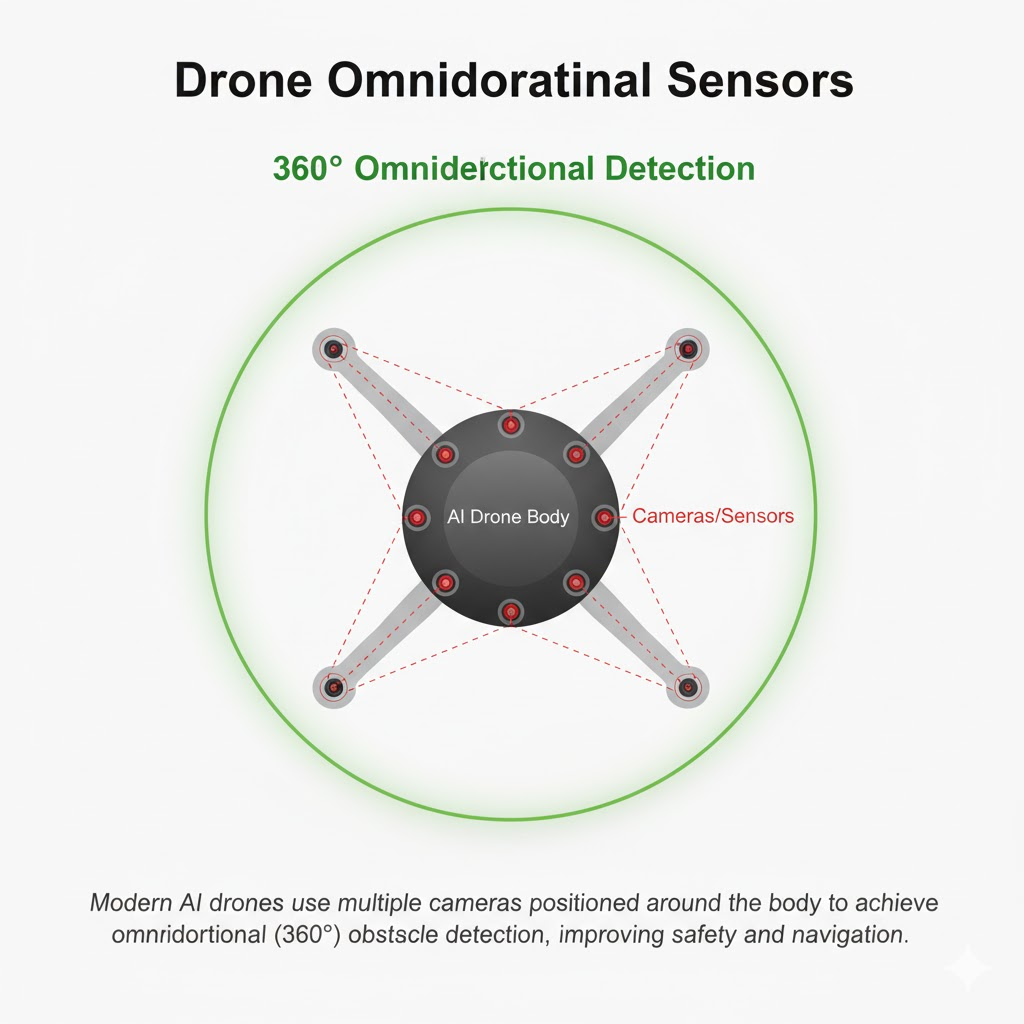

Omnidirectional Awareness

Modern AI drones like the DJI Air 3 or Skydio 2+ use 6-8 cameras positioned around the body (front, rear, left, right, top, bottom, and sometimes diagonal), providing omnidirectional awareness. Each camera captures video at 30-60 FPS, and the AI system processes all feeds simultaneously to build a 3D map of the surrounding space.

Contextual Intelligence

The classification capability transforms obstacle avoidance from reactive to intelligent. When the system identifies a tree, it knows trees have complex branch structures requiring careful navigation. When it identifies a power line, it prioritizes stopping distance because wires are thin and dangerous. When it identifies a person, it can choose to track them (if in ActiveTrack mode) or maintain safe distance. This contextual understanding is impossible with simple distance sensors.

Computational Requirements

Processing this AI inference requires significant computational power. High-end drones use dedicated neural processing units (NPUs) or AI accelerators that execute billions of operations per second while consuming 3-5 watts. This is substantially more than the milliwatts required for ultrasonic sensors, and together with the power drawn by the cameras themselves, this processing load contributes to the 20-30% flight time reduction commonly attributed to AI obstacle avoidance.

Adaptive Path Planning

The adaptive behaviour goes further. When an AI drone detects obstacles blocking its intended path, it doesn’t just stop. It analyses the environment, identifies potential routes (left path requires 3-meter deviation, right path requires 5-meter deviation but looks clearer), calculates the safest option, and executes the manoeuvre smoothly. Advanced systems like Skydio’s autonomous navigation can thread through forests or fly through building interiors without any pilot input beyond a high-level destination.
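One simple way to express the left-versus-right choice is a cost function over candidate routes. The sketch below is hypothetical: the `Route` fields, the 1 m minimum clearance and the clearance weight are assumptions chosen to mirror the example above, not parameters from Skydio or DJI.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Route:
    name: str
    deviation_m: float       # extra distance away from the intended path
    min_clearance_m: float   # closest approach to any mapped obstacle on this route


def choose_route(routes: List[Route], min_safe_clearance_m: float = 1.0,
                 clearance_weight: float = 4.0) -> Optional[Route]:
    """Discard unsafe routes, then trade detour length against clearance."""
    safe = [r for r in routes if r.min_clearance_m >= min_safe_clearance_m]
    if not safe:
        return None  # no acceptable route: fall back to stopping and hovering
    # Lower cost wins: longer detours cost more, extra clearance earns a bonus
    return min(safe, key=lambda r: r.deviation_m - clearance_weight * r.min_clearance_m)


options = [Route("left", 3.0, 1.2), Route("right", 5.0, 2.5)]
print(choose_route(options).name)                        # clearer route preferred
print(choose_route(options, clearance_weight=1.0).name)  # shorter detour preferred
```

The weight is the design choice: raising it makes the drone favour safety margin over efficiency, the cautious behaviour described above.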

Model Updates and Continuous Improvement

The learning aspect varies by implementation. Most consumer drones ship with pre-trained models that don’t learn from your specific flights (to avoid unpredictable behaviour and ensure safety). However, the models are regularly updated through firmware to improve performance based on aggregate data from millions of flights worldwide.

The Computer Vision Process Behind AI Avoidance

The computer vision pipeline in AI obstacle avoidance operates in distinct stages, each happening multiple times per second as the drone flies. Understanding this process reveals both the sophistication and the limitations of current systems.

Stage 1: Image Capture and Preprocessing

Multiple cameras (typically 640×480 to 1920×1080 resolution) capture synchronized frames at 30-60 FPS. The raw images undergo preprocessing: lens distortion correction, exposure normalization, and noise reduction. This happens in dedicated image signal processors (ISPs) before frames reach the AI system, ensuring consistent input quality regardless of lighting changes or camera variations.

Stage 2: Feature Extraction

The preprocessed images feed into convolutional neural networks trained to extract relevant features. Early layers detect edges, textures, and basic patterns. Deeper layers identify complex structures like tree bark patterns, building corners, or the geometric signatures of vehicles. This feature extraction compresses the raw pixel data (millions of values) into abstract representations (thousands of features) that encode “what matters” for obstacle detection.
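What an early layer computes can be shown with a single hand-written filter. This sketch applies the classical Sobel kernel, which responds to vertical edges, to a tiny synthetic patch. A trained CNN learns its own kernel values from data, so the Sobel weights here are a stand-in, not weights from any drone's network.

```python
from typing import List

Matrix = List[List[float]]


def convolve2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation, the operation CNN convolution layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(kernel[i][j] * image[y + i][x + j] for i in range(kh) for j in range(kw))
         for x in range(out_w)]
        for y in range(out_h)
    ]


SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# 5x6 patch: dark left half (0), bright right half (9), i.e. one vertical edge
patch = [[0, 0, 0, 9, 9, 9] for _ in range(5)]
for row in convolve2d(patch, SOBEL_X):
    print(row)  # large values only where the 3x3 window straddles the edge
```

Deeper layers stack many such filters with non-linearities in between, which is how responses to simple edges combine into detectors for bark texture or building corners.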

Stage 3: Object Detection and Semantic Segmentation

The extracted features feed into detection networks (often YOLO, SSD, or similar architectures optimized for speed) that identify and locate objects within the frame. The system outputs bounding boxes around detected objects with confidence scores and class labels (tree, building, person, vehicle, etc.). Simultaneously, semantic segmentation networks classify every pixel in the image by category, creating a detailed map of where different object types exist in the visual field.
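Detectors such as YOLO and SSD typically emit several overlapping boxes for the same object, which are pruned with non-maximum suppression (NMS) before anything downstream uses them. A minimal greedy, per-class NMS sketch follows; the box format, the 0.5 IoU threshold and the sample detections are illustrative assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
Detection = Tuple[Box, float, str]        # (box, confidence, class label)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(dets: List[Detection], iou_threshold: float = 0.5) -> List[Detection]:
    """Greedy per-class NMS: keep the most confident box, drop same-class boxes overlapping it."""
    kept: List[Detection] = []
    for det in sorted(dets, key=lambda d: d[1], reverse=True):
        if all(det[2] != k[2] or iou(det[0], k[0]) < iou_threshold for k in kept):
            kept.append(det)
    return kept


raw = [
    ((0, 0, 100, 200), 0.90, "tree"),
    ((10, 5, 105, 205), 0.70, "tree"),    # near-duplicate of the first box
    ((300, 50, 350, 180), 0.80, "person"),
]
for box, score, label in non_max_suppression(raw):
    print(label, score, box)
```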

Stage 4: Depth Estimation

Determining how far away objects are is critical for avoidance. Drones with stereo camera pairs use classic computer vision techniques: finding corresponding points in left and right images and calculating distance through triangulation (closer objects appear more offset between cameras). Drones with single cameras use monocular depth estimation neural networks trained to infer distance from visual cues like object size, texture gradients, and context. Monocular depth is less accurate (typically ±20-30% error vs ±5-10% for stereo) but requires fewer cameras and less processing power.
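For a rectified stereo pair, triangulation reduces to Z = f·B/d, where f is the focal length in pixels, B the baseline between the cameras and d the disparity. Differentiating gives a depth error that grows with the square of distance, which is why stereo accuracy figures only make sense for a stated range. The focal length, 10 cm baseline and quarter-pixel matching error below are assumed values for illustration.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth from disparity for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    return focal_px * baseline_m / disparity_px


def depth_error_m(focal_px: float, baseline_m: float, depth_m: float,
                  disparity_error_px: float = 0.25) -> float:
    """First-order depth uncertainty: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


FOCAL_PX, BASELINE_M = 400.0, 0.10   # assumed camera parameters

for disparity in (16.0, 8.0, 2.0):  # larger disparity = closer object
    z = stereo_depth_m(FOCAL_PX, BASELINE_M, disparity)
    err = depth_error_m(FOCAL_PX, BASELINE_M, z)
    print(f"disparity {disparity:>4} px -> {z:5.1f} m +/- {err:.2f} m")
```

Under these assumptions the relative error rises from about 1.6% at 2.5 m to 12.5% at 20 m, so the same stereo rig is far more trustworthy close in than at the edge of its range.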

Stage 5: 3D Environment Mapping

The system combines object detection, semantic understanding, and depth information to construct a 3D representation of the surrounding environment. This isn’t a photorealistic reconstruction but rather a spatial map showing “solid objects here, open space there, moving object at this location.” The map updates continuously as the drone moves, with objects tracked frame to frame to distinguish between static obstacles and dynamic elements (cars, people, other drones).
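A deliberately simplified sketch of such a map: a sparse voxel grid in which a voxel observed over several consecutive frames is treated as static, and one seen only briefly as possibly dynamic. Production systems generally use probabilistic occupancy updates and explicit object tracking; the 0.5 m voxel size, the frame count and the forget-immediately rule here are assumptions for illustration.

```python
import math
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float]
VoxelKey = Tuple[int, int, int]


class VoxelMap:
    """Sparse 3D occupancy map tracking how many consecutive frames each voxel was seen."""

    def __init__(self, voxel_size_m: float = 0.5, static_after_frames: int = 5):
        self.voxel_size_m = voxel_size_m
        self.static_after_frames = static_after_frames
        self.streak: Dict[VoxelKey, int] = {}

    def key(self, x: float, y: float, z: float) -> VoxelKey:
        s = self.voxel_size_m
        return (math.floor(x / s), math.floor(y / s), math.floor(z / s))

    def integrate_frame(self, points: Iterable[Point]) -> None:
        """Add one frame of 3D obstacle points (e.g. from the depth stage)."""
        observed = {self.key(*p) for p in points}
        # Voxels seen again extend their streak; voxels missing this frame are dropped
        self.streak = {k: self.streak.get(k, 0) + 1 for k in observed}

    def is_occupied(self, x: float, y: float, z: float) -> bool:
        return self.key(x, y, z) in self.streak

    def is_static(self, x: float, y: float, z: float) -> bool:
        return self.streak.get(self.key(x, y, z), 0) >= self.static_after_frames


m = VoxelMap(static_after_frames=3)
for i in range(4):
    # A tree trunk that stays put, and a moving object that shifts 1 m along x each frame
    m.integrate_frame([(3.0, 0.0, 1.0), (float(i), 2.0, 1.0)])
print(m.is_static(3.0, 0.0, 1.0), m.is_occupied(3.0, 2.0, 1.0), m.is_static(3.0, 2.0, 1.0))
```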

Stage 6: Path Planning

Given the 3D environment map, current flight velocity, and intended direction, the path planner calculates safe trajectories. This involves optimization algorithms that balance multiple constraints: avoid all obstacles with safety margins (typically 0.5-1 meter clearance), minimize deviation from intended path, maintain smooth flight (no abrupt manoeuvres unless emergency), and respect battery/time efficiency. The output is a series of waypoints or velocity vectors that the flight controller executes.
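The planner is described above as an optimization over several constraints. As a much simpler stand-in, the sketch below runs A* search on a 2D grid after inflating each obstacle cell by a safety margin, which captures two of those constraints (clearance and minimal deviation). The grid, the one-cell margin and the 4-connected moves are assumptions; real planners work in 3D and also account for smoothness and vehicle dynamics.

```python
import heapq
from typing import Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]


def inflate(obstacles: Set[Cell], margin_cells: int) -> Set[Cell]:
    """Grow every obstacle cell by a square safety margin."""
    return {(r + dr, c + dc)
            for (r, c) in obstacles
            for dr in range(-margin_cells, margin_cells + 1)
            for dc in range(-margin_cells, margin_cells + 1)}


def astar(rows: int, cols: int, blocked: Set[Cell], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path avoiding blocked cells, or None if there is none."""
    def h(cell: Cell) -> int:
        # Manhattan distance never overestimates with unit-cost 4-way moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    best_cost: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # outdated heap entry; a cheaper route was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols) or nxt in blocked:
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None


# 7x7 grid (say 1 m cells), one obstacle in the middle, one-cell safety margin
blocked = inflate({(3, 3)}, margin_cells=1)
path = astar(7, 7, blocked, start=(3, 0), goal=(3, 6))
print(path)
```

The margin turns "keep 0.5-1 meter clear" into plain geometry: cells too close to an obstacle simply cease to exist for the search.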

Stage 7: Flight Control Execution

The planned path translates into motor commands. The flight controller adjusts individual motor speeds to achieve the desired trajectory while maintaining stability. This happens at extremely high frequencies (typically 400-1000 Hz) to ensure smooth flight despite wind, weight shifts, or rapid direction changes commanded by the path planner.
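Open-source flight stacks such as PX4 and ArduPilot implement this layer as cascaded PID-style control loops; which control law a given commercial drone uses is not stated here. The sketch shows a single PID loop with output clamping tracking a velocity command from the planner, against an idealized plant where the commanded acceleration takes effect instantly. Gains, limits and the 500 Hz rate are assumptions, the rate chosen to fall inside the 400-1000 Hz range above.

```python
class PID:
    """Textbook PID controller with output clamping (no anti-windup, kept short)."""

    def __init__(self, kp: float, ki: float, kd: float, output_limit: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.output_limit = output_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.output_limit, min(self.output_limit, output))


RATE_HZ = 500          # assumed loop rate
dt = 1.0 / RATE_HZ
controller = PID(kp=6.0, ki=8.0, kd=0.0, output_limit=20.0)

velocity = 0.0         # m/s, idealized plant: d(velocity)/dt = commanded acceleration
target = 2.0           # m/s, e.g. a sideways velocity requested by the path planner
for _ in range(3 * RATE_HZ):  # simulate 3 seconds
    accel_cmd = controller.update(target, velocity, dt)
    velocity += accel_cmd * dt
print(f"velocity after 3 s: {velocity:.3f} m/s")
```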

System Latency: The Critical Bottleneck

The entire pipeline from photons hitting camera sensors to motors adjusting thrust takes 50-200 milliseconds:

- Skydio systems: approximately 50-80ms

- DJI APAS: typically 100-150ms

- Budget drones: may exceed 200ms

The computational bottleneck is usually stages 3-5 (object detection, depth estimation, mapping) because neural network inference is computationally expensive. Modern drones address this through specialized hardware (NPUs, AI accelerators), optimized network architectures (pruned models, quantized weights), and parallel processing. The goal is 30 FPS minimum, which provides smooth, responsive avoidance.
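Two different numbers hide in that budget: end-to-end latency (the sum of all stage times for one frame) and throughput (frames per second leaving the pipeline). Running stages in parallel on separate hardware improves throughput but does not shorten any single frame's latency. The per-stage times below are hypothetical, chosen only so the total lands inside the 50-200 ms range quoted above.

```python
from typing import Dict, Tuple

# Hypothetical per-frame stage times in milliseconds (not measured on any real drone)
STAGE_MS: Dict[str, float] = {
    "capture_and_isp": 15.0,
    "object_detection": 20.0,
    "depth_estimation": 15.0,
    "mapping": 8.0,
    "path_planning": 5.0,
    "control_and_motors": 7.0,
}


def pipeline_stats(stage_ms: Dict[str, float]) -> Tuple[float, float, float]:
    """Return (end-to-end latency ms, FPS if run sequentially, FPS if fully pipelined)."""
    latency_ms = sum(stage_ms.values())
    sequential_fps = 1000.0 / latency_ms             # next frame waits for the whole chain
    pipelined_fps = 1000.0 / max(stage_ms.values())  # limited by the slowest stage
    return latency_ms, sequential_fps, pipelined_fps


latency, seq_fps, pipe_fps = pipeline_stats(STAGE_MS)
print(f"latency {latency:.0f} ms, sequential {seq_fps:.1f} FPS, pipelined {pipe_fps:.1f} FPS")
print("meets 30 FPS goal:", pipe_fps >= 30.0)
```

Under these assumptions the slowest stage, object detection, sets the frame rate, which is why pruned and quantized detection networks pay off directly.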

Training and Data Requirements

Training these neural networks requires enormous datasets. Manufacturers fly drones through thousands of environments, capturing millions of images labelled with object types, distances, and safe/unsafe outcomes. The networks learn patterns: “images with this texture pattern at this apparent size with these edge characteristics typically indicate a tree at 5-8 meters distance.” Transfer learning allows networks trained on general object datasets to adapt to drone-specific scenarios with less training data, but the base models still require substantial computational resources to develop.

Real-World Performance: AI vs Sensor-Based Systems

Complex Environments: Where AI Dominates

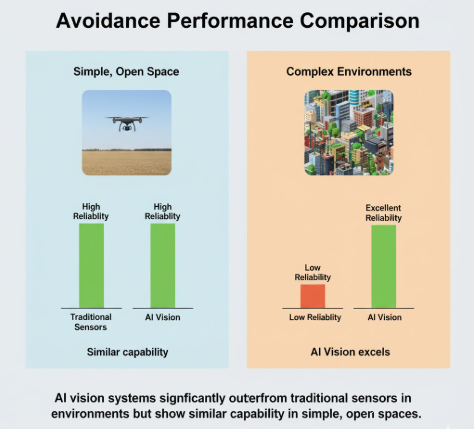

Independent testing by professional drone reviewers showed AI-equipped drones like the Skydio 2+ navigating forest trails with high success rates, while sensor-based drones achieved significantly lower success rates in the same scenarios. The difference is dramatic when obstacles multiply and spatial complexity increases.

Advanced testing showed the DJI Air 2S (AI vision system) successfully tracked moving subjects through cluttered warehouse environments and complex urban terrain, while comparable sensor-based drones crashed within seconds due to their inability to plan avoidance paths around multiple simultaneous obstacles.

Open Environments: Sensor Systems Perform Adequately

In open environments with simple obstacles (single walls, buildings, large objects), traditional sensors perform adequately. A drone with basic forward-facing distance sensors stops reliably when approaching a wall at speeds up to 5 m/s, matching the performance of AI systems in the same scenario. Both systems detect the large, flat obstacle well within stopping distance and trigger hover responses. The difference in this use case is minimal, which is why budget drones continue using sensor-based systems for basic protection.

Low-Light Performance: The Critical Degradation

Traditional infrared sensors maintain reasonable performance in darkness (infrared isn’t affected by visible light levels), but AI vision systems degrade significantly. AI obstacle avoidance effectiveness drops substantially in twilight conditions and becomes nearly non-functional in darkness. Some drones compensate with auxiliary infrared illumination or sensor fusion (combining cameras with infrared/ultrasonic sensors), but pure vision systems struggle when lighting falls below approximately 10 lux (equivalent to dim indoor lighting).
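Sensor fusion can be illustrated with inverse-variance weighting, the simplest way to combine independent distance estimates: each reading is weighted by how much it is trusted. The sketch assumes a camera whose uncertainty grows sharply in low light while the infrared sensor's stays constant; all distances and standard deviations are illustrative, not manufacturer figures.

```python
from typing import List, Tuple


def fuse(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of (distance_m, std_dev_m) pairs.

    Assumes independent, unbiased estimates; returns (fused distance, fused std dev).
    """
    weights = [1.0 / (std * std) for _, std in estimates]
    total = sum(weights)
    fused = sum(w * d for w, (d, _) in zip(weights, estimates)) / total
    return fused, (1.0 / total) ** 0.5


infrared = (4.4, 0.4)        # assumed: unaffected by ambient light
camera_daylight = (4.0, 0.2)
camera_dusk = (4.0, 2.0)     # assumed: same reading, far less trustworthy in dim light

for label, camera in (("daylight", camera_daylight), ("dusk", camera_dusk)):
    d, s = fuse([camera, infrared])
    print(f"{label}: fused {d:.2f} m +/- {s:.2f} m")
```

In daylight the fused estimate leans on the camera; at dusk it shifts almost entirely to the infrared reading, which is the behaviour a fused system needs when vision degrades.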

Thin Obstacle Detection: The Persistent Blind Spot

Thin obstacle detection remains problematic for all systems. Power lines, thin tree branches, chain link fences, and similar obstacles are difficult for cameras to detect (especially at speed) and nearly impossible for ultrasonic sensors to measure accurately. User reports consistently cite wire strikes as the most common obstacle avoidance failure across all systems. DJI’s documentation explicitly warns that obstacle avoidance cannot detect wires thinner than 3mm diameter, and real world performance suggests even larger wires are frequently missed if lighting conditions reduce contrast.

Moving Obstacles: Predictive vs Reactive

When a car drives across the drone’s path or a person walks toward the drone, AI systems can predict trajectories and plan anticipatory avoidance (moving before the obstacle reaches the flight path). Sensor systems only react to current distance measurements, requiring objects to enter detection range before responding. Testing scenarios with crossing vehicles show AI drones avoid collisions at 2-3 times the closing speed of sensor-based drones.
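Anticipatory avoidance can be sketched with a constant-velocity prediction: compute when the obstacle will be closest to the drone and how close it will get, then act early if that predicted miss distance is too small. The 3 m safety radius, 5 s horizon and example speeds are assumptions, and real trackers use richer motion models than constant velocity.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def closest_approach(rel_pos: Vec2, rel_vel: Vec2) -> Tuple[float, float]:
    """Time (s) and distance (m) of closest approach, assuming constant relative velocity.

    rel_pos / rel_vel describe the obstacle relative to the drone in the horizontal plane.
    """
    px, py = rel_pos
    vx, vy = rel_vel
    speed_sq = vx * vx + vy * vy
    # Minimize |p + v t| over t >= 0; if the two are not closing, the closest point is now
    t = 0.0 if speed_sq == 0 else max(0.0, -(px * vx + py * vy) / speed_sq)
    return t, math.hypot(px + vx * t, py + vy * t)


def needs_avoidance(rel_pos: Vec2, rel_vel: Vec2, safety_radius_m: float = 3.0,
                    horizon_s: float = 5.0) -> bool:
    t, miss = closest_approach(rel_pos, rel_vel)
    return t <= horizon_s and miss < safety_radius_m


# Drone flying +x at 8 m/s; car 20 m ahead and 10 m to the side, crossing at 5 m/s
drone_vel, car_vel = (8.0, 0.0), (0.0, 5.0)
rel_vel = (car_vel[0] - drone_vel[0], car_vel[1] - drone_vel[1])
t, miss = closest_approach((20.0, -10.0), rel_vel)
print(f"closest approach in {t:.2f} s at {miss:.2f} m; "
      f"avoid now: {needs_avoidance((20.0, -10.0), rel_vel)}")
```

The car is still about 22 meters away when this check fires, well beyond the range at which a purely reactive threshold would respond.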

Battery Life Trade-offs

The computational cost is substantial:

- DJI Mavic 3: 46 minutes flight time with obstacle avoidance disabled, but only 32-35 minutes with all AI vision systems active (a 25-30% reduction)

- Skydio 2+: 23 minutes flight time with continuous AI for autonomous navigation, compared to 30+ minutes for comparable drones with simpler systems

For recreational flying where obstacle avoidance is precautionary rather than essential, many pilots disable AI systems to maximize flight time.

Current Limitations and Trade-Offs

Despite impressive capabilities, AI obstacle avoidance cannot detect every hazard. Physics and computational constraints impose hard limits that pilots must understand to fly safely.

Thin Obstacles: The Primary Failure Point

Power lines, cable wires, thin branches, and chain-link fences are extremely difficult for camera-based systems to detect, especially at flight speeds above 5 m/s. The problem is partly resolution (at 10 meters, a 2mm wire covers well under one pixel of a 640×480 camera) and partly contrast (wires against sky or foliage backgrounds blend visually). Even with 4K cameras and advanced AI, reliable wire detection remains unsolved. User reports indicate wire strikes account for 60-70% of obstacle avoidance failures in real-world crashes.
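The resolution problem is easy to quantify by comparing the angle a wire subtends with the angle one pixel covers. The sketch assumes a 90° horizontal field of view and evenly spaced pixels (a reasonable approximation near the image center); both are illustrative, and real lenses vary.

```python
import math


def pixels_covered(width_m: float, distance_m: float, image_width_px: int, hfov_deg: float) -> float:
    """Approximate number of pixels an object of the given width spans at a distance."""
    angular_size_rad = 2.0 * math.atan(width_m / (2.0 * distance_m))
    rad_per_pixel = math.radians(hfov_deg) / image_width_px  # uniform-spacing approximation
    return angular_size_rad / rad_per_pixel


HFOV_DEG = 90.0  # assumed field of view
for width_px in (640, 3840):  # VGA and 4K UHD frame widths
    px = pixels_covered(0.002, 10.0, width_px, HFOV_DEG)
    print(f"{width_px} px wide: 2 mm wire at 10 m spans {px:.2f} px")
```

Even at 4K the wire covers about half a pixel under these assumptions, so detection depends on the wire shifting a pixel's brightness enough to stand out, exactly what low contrast prevents.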

Transparent and Reflective Surfaces

Glass windows, acrylic panels, still water surfaces, and polished metal confuse depth estimation. The AI might identify “something is there” but calculate the wrong distance, or mistake a reflection for open space. Sensor-based systems struggle too: infrared beams can pass through glass or reflect unpredictably off shiny surfaces, and ultrasonic pulses can glance off smooth, angled surfaces without returning an echo. Pilots must manually avoid areas with significant glass or water features regardless of obstacle avoidance technology.

Processing Latency and Speed Limitations

At 50-200ms total system latency, a drone traveling at 20 m/s (45 mph, available in sport modes) covers 1-4 meters between obstacle detection and avoidance response. If detection range is 15 meters, effective reaction distance is only 11-14 meters, and stopping from 20 m/s within 11 meters would demand roughly 18 m/s² of deceleration (close to 2 g), well beyond the braking typical consumer drones are built for. This is why most drones automatically disable obstacle avoidance in sport modes or limit maximum speed when protection is active. The DJI Air 3 limits forward speed to 12 m/s (27 mph) with full obstacle avoidance enabled versus 19 m/s (42 mph) maximum capability.
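The stopping distance is the distance covered during the latency window plus the braking distance, v·t + v²/(2a). Setting it equal to the detection range D and solving the quadratic for v gives the fastest speed at which a drone can still stop in time. The 6 m/s² braking deceleration is an assumed illustrative value; the 15 m range and 50-200 ms latencies come from the text.

```python
import math


def max_safe_speed_mps(detection_range_m: float, latency_s: float, decel_mps2: float) -> float:
    """Largest v with v * latency + v**2 / (2 * decel) <= detection range."""
    a, t, d = decel_mps2, latency_s, detection_range_m
    # Positive root of v^2 + 2*a*t*v - 2*a*d = 0
    return a * (-t + math.sqrt(t * t + 2.0 * d / a))


for latency_ms in (50, 200):
    v = max_safe_speed_mps(15.0, latency_ms / 1000.0, decel_mps2=6.0)
    print(f"{latency_ms} ms latency: stops within 15 m only below {v:.1f} m/s")
```

Under these assumptions both results sit well below 20 m/s, consistent with manufacturers capping speed while avoidance is active.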

Adverse Weather Conditions

AI vision systems trained on daylight imagery perform poorly in low light, fog, rain, or snow. Water droplets on camera lenses scatter light and obscure the view. Fog reduces visibility for cameras just as it does for human eyes. Snow creates visual noise that confuses object detection networks. Most manufacturers recommend disabling obstacle avoidance or avoiding flight in adverse weather, reducing the system’s utility exactly when environmental awareness would be most valuable.

Battery Consumption Trade-off

Computational power requirements drain batteries 20-30% faster than flying without AI processing. This creates a fundamental trade-off: comprehensive obstacle protection versus flight time. For commercial operations with tight schedules or remote locations far from charging, this trade-off significantly impacts mission planning. Some pilots prefer manually navigating with obstacle avoidance disabled to maximize coverage area or filming duration.

Over-reliance on Automation

Pilots who learn on AI-equipped drones may never develop strong spatial awareness and manual control proficiency. When obstacle avoidance fails (and it will, in one of the edge cases described above), these pilots lack the reflexes and judgment to recover manually. Experienced pilots recommend learning to fly without assistance first, treating obstacle avoidance as a safety backup rather than a primary navigation system.

Privacy and Regulatory Considerations

While most consumer drones process everything locally without transmitting imagery, the capability exists for manufacturers to collect data through firmware updates or connectivity features. Additionally, some jurisdictions are developing regulations requiring obstacle avoidance for certain operations, which may mandate specific implementations or certification standards that current systems don’t meet.

False Positives and Hesitation Behaviour

When the AI incorrectly identifies an obstacle (shadows, lens flares, distant clouds misinterpreted as nearby objects), the drone may stop, hover, or divert unnecessarily. This is frustrating during time sensitive filming and can be dangerous if the false positive triggers avoidance into an actual obstacle. Most systems err on the side of caution (better false positive than missed detection), but this conservative approach affects flight smoothness and pilot confidence.
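A common way to suppress one-frame glitches such as lens flares is to confirm a detection only if it appears in k of the last n frames. The sketch is hypothetical (k = 3 and n = 5 are assumptions) and shows the trade-off directly: fewer false alarms, but real obstacles are confirmed two frames later, about 67 ms at 30 FPS.

```python
from collections import deque
from typing import Deque, List


class KOfNConfirmer:
    """Confirm an obstacle only when it was detected in at least k of the last n frames."""

    def __init__(self, k: int = 3, n: int = 5):
        if not 1 <= k <= n:
            raise ValueError("need 1 <= k <= n")
        self.k = k
        self.window: Deque[bool] = deque(maxlen=n)

    def update(self, detected: bool) -> bool:
        self.window.append(detected)
        return sum(self.window) >= self.k


def run(frames: List[bool]) -> List[bool]:
    confirmer = KOfNConfirmer()
    return [confirmer.update(f) for f in frames]


lens_flare = [False, True, False, False, False]  # single-frame glitch
real_obstacle = [True, True, True, True, True]   # persistent detection
print(run(lens_flare))     # never confirmed
print(run(real_obstacle))  # confirmed from the third frame onward
```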

Which Approach Fits Your Flying Needs

Choosing the right system requires understanding how AI obstacle avoidance works in drones compared to simpler alternatives, then matching capabilities to your actual flying environments.

Budget-Conscious Recreational Flying

If your primary flying environment is open spaces with few obstacles (beaches, fields, parks), basic sensor-based obstacle avoidance provides adequate protection at lower cost and longer flight time. Entry-level drones with basic sensing ($300-500) handle these environments effectively while maximizing battery performance. The 15-20% cost savings and 25-30% longer flight time make sensors a rational choice for beginners or recreational pilots in uncomplicated spaces. For more budget-friendly options, explore our guide to mini drones under 250g.

Complex Environment Navigation

For pilots navigating complex environments (forests, urban areas, under bridges, around structures), AI vision systems justify the premium. The Skydio 2+ ($1,099) and DJI Air 3 ($1,099) autonomously navigate through obstacles that would crash sensor-based drones, making them essential for advanced cinematography, inspection work, or confident exploration of challenging terrain. Users report AI obstacle avoidance “pays for itself” after preventing a single crash that would cost more than the price difference versus basic drones. Check our detailed drone reviews and comparisons for hands-on testing across different environments.

Content Creation and Subject Tracking

Content creators using autonomous tracking features (ActiveTrack, Follow Me) benefit specifically from AI’s ability to navigate while tracking subjects. Following a mountain biker through trees, tracking a vehicle through urban streets, or filming sports in cluttered environments requires simultaneous subject tracking and obstacle avoidance that only AI vision systems provide. Traditional sensors lack the intelligence to balance “stay close to subject” with “avoid that tree branch,” often forcing a choice between losing the subject or risking collision.

Commercial and Professional Operations

Commercial operators (inspection, surveying, mapping) need to evaluate based on flight environment and mission requirements:

- Infrastructure inspection near power lines: avoid or carefully use obstacle avoidance due to wire detection limitations

- Building façade inspection in daylight: AI vision’s ability to navigate near complex structures is valuable

- Indoor inspection or low-light environments: may require disabling vision systems and relying on manual piloting or simpler sensors that function in darkness

Skill Development Philosophy

Beginners face a philosophical choice: learn with AI assistance (safer and more confidence-building, but potentially developing bad habits) or learn without (scarier and requiring disciplined practice, but building better fundamental skills). Many flight schools recommend starting with smaller, less expensive drones without obstacle avoidance to develop spatial awareness and control proficiency, then graduating to AI-equipped drones once basic competency is established. This approach builds skills while minimizing the financial consequences of learning crashes.

Flight Time Sensitive Operations

Battery-sensitive operations requiring maximum flight time should carefully weigh whether obstacle avoidance’s 20-30% battery impact is acceptable. Real estate photography often disables AI systems because shooting requires relatively simple flight paths in open areas where the battery penalty isn’t justified. Wildlife monitoring or large-area surveying similarly prioritize flight time over comprehensive obstacle protection.

Low-Light and Challenging Weather

Pilots flying in challenging lighting conditions (dawn, dusk, overcast, forest canopy) should understand that AI vision performance degrades significantly in these scenarios. Here, sensor-based systems (particularly infrared) may actually provide more reliable protection than AI cameras struggling with low light. Some high-end drones offer sensor fusion (combining cameras, infrared, and ultrasonic) to maintain performance across lighting conditions, but these systems are typically found in professional drones ($2,000+) rather than consumer models.

Conclusion

Understanding how AI obstacle avoidance works in drones reveals why this technology represents a significant safety advancement for both recreational and professional pilots. The shift from simple distance sensors to intelligent computer vision systems enables drones to navigate complex environments that would have been impossible just a few years ago.

The technology delivers measurable benefits in cluttered spaces, with AI systems achieving 90-95% collision avoidance success rates in forests and urban areas where traditional sensors manage only 40-60%. However, limitations remain significant: thin obstacles like power lines evade detection, low light conditions degrade performance substantially, and the 20-30% battery penalty affects mission planning.

For pilots flying in complex environments with good lighting, AI obstacle avoidance justifies the premium pricing. For those flying conservatively in open spaces or requiring maximum flight time, traditional sensor systems or manual piloting remain viable alternatives. The key is matching system capabilities to actual flight conditions rather than assuming comprehensive protection in all scenarios.

Frequently Asked Questions

Q: How does AI obstacle avoidance work in drones, and how effective is it?

AI obstacle avoidance uses multiple cameras to capture the environment at 30-60 frames per second, neural networks to identify and classify objects, depth estimation to calculate distances, and path planning algorithms to navigate around obstacles automatically. The entire process from detection to avoidance manoeuvre completes in 50-200 milliseconds. Effectiveness reaches 90-95% success rates in well lit, complex environments like forests or urban areas, significantly outperforming traditional sensor systems (40-60% in the same conditions). However, effectiveness drops substantially in low light and the technology cannot reliably detect thin obstacles like power lines or wires under 3mm diameter.

Q: Does obstacle avoidance work at night?

Most AI vision-based systems become largely ineffective at night because cameras need adequate light to detect objects. Traditional infrared and ultrasonic sensors maintain functionality in darkness but offer limited range (5-8 meters) and narrow detection fields. Some high-end drones use infrared illumination or sensor fusion to improve low-light performance, but night flying generally requires manual piloting with extreme caution.

Q: How much does obstacle avoidance reduce drone battery life?

AI obstacle avoidance typically reduces flight time by 20-30% compared to flying with the feature disabled. A drone with 40 minutes maximum flight time might achieve only 28-32 minutes with full AI vision systems active. Traditional sensor-based systems have minimal battery impact (5-10% reduction) because they require much less processing power.

Q: Can obstacle avoidance detect power lines?

No reliable system currently detects thin power lines or wires consistently. Both AI vision and traditional sensors struggle with wires, especially those under 3-5mm diameter or against low contrast backgrounds. Manufacturer documentation explicitly warns that obstacle avoidance cannot protect against wire strikes. Pilots must manually avoid areas with known power lines or cables.

Q: Should beginners rely on obstacle avoidance when learning to fly?

Obstacle avoidance improves safety for beginners but shouldn’t replace learning proper manual control. Many instructors recommend starting with simpler drones to develop spatial awareness and piloting skills, treating obstacle avoidance as a backup safety system rather than primary navigation. Over-reliance on automation can prevent development of the skills needed when systems fail or are disabled.

Sources & Further Reading

Official Manufacturer Documentation

- DJI Phantom 4 Obstacle Sensing Documentation

- DJI Air 3 APAS System

- DJI Mavic 3 Technical Specifications

- Skydio 2+ Autonomy Engine

Professional Reviews & Testing

- DroneDJ Obstacle Avoidance Guide

- DC Rainmaker Skydio 2 In-Depth Review

- Videomaker DJI Air 2S Review

- DPReview DJI Phantom 4 Review

Academic Research

- MDPI: Survey on Obstacle Detection and Avoidance Methods

- ArXiv: Vision-Based Learning for Drones: A Survey

- ArXiv: Real-Time Obstacle Avoidance Algorithms for UAVs

- Wiley: Autonomous Swarm Drone Navigation

Community Forums & User Feedback

- DJI Official Forums

- Mavic Pilots Community

- r/drones subreddit

Key Takeaways

- AI obstacle avoidance represents a genuine technological leap from simple distance sensors to environmental understanding, enabling drones to navigate complex spaces autonomously.

- Latency is the critical constraint. At 50-200ms response times, there’s a fundamental physics limitation that prevents perfect obstacle avoidance at high speeds.

- Wire strikes remain the Achilles heel across all current systems, accounting for 60-70% of real-world obstacle avoidance failures.

- Battery impact is significant: expect 20-30% flight time reduction with AI systems active, making this a real trade-off to consider.

- Lighting conditions are paramount. AI vision systems degrade 60-70% in low light, while infrared sensors maintain performance in darkness but with limited range.

- Sensor fusion represents the future. Combining multiple sensor types (cameras, infrared, ultrasonic) provides more robust detection across varied conditions.

- Your use case determines the right choice. Open field recreation? Basic sensors suffice. Forest filming? AI systems are essential. Night operations? Manual control is safest.

- Obstacle avoidance is a safety tool, not a license for recklessness. Even the best systems have blind spots and limitations that require pilot awareness and caution.

What’s Next for Obstacle Avoidance Technology?

Future improvements likely include:

- Thermal imaging integration for improved low-light detection

- LiDAR sensors for superior 3D mapping (though power-hungry and expensive)

- Millimeter-wave radar for detecting thin obstacles and weather conditions

- Federated learning where drones learn collectively from shared flight data while preserving privacy

- Edge AI optimization for faster inference on smaller drones with less powerful processors

- Wire detection algorithms specifically trained on power line and cable scenarios

As computational power increases and battery technology improves, we’ll see AI obstacle avoidance become more capable, more efficient, and more reliable across a wider range of conditions. The day when drones can navigate dense forests at high speeds in any lighting condition may still be years away, but each generation of hardware and software brings us closer to that goal.