Introduction

If you’ve heard both “explainable AI” and “AI governance” used interchangeably, you’re not alone—and that confusion is costing teams time and resources. Ask ten teams building AI systems whether they “have governance,” and half will say yes because they use explainability tools. The other half will say no because they don’t have documentation or risk processes. Both are mixing concepts that sound similar but solve very different problems.

Here’s the distinction that matters:

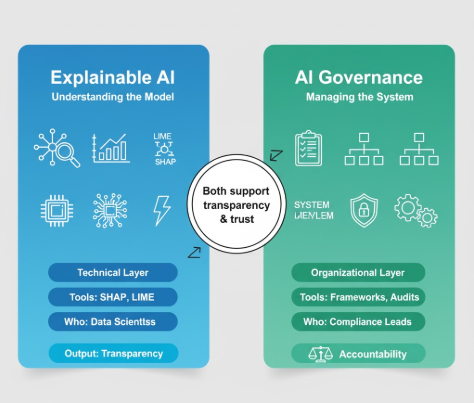

- Explainable AI (XAI) is a technical layer: tools and methods that help you understand how a model made a prediction.

- AI governance is an organizational layer: the rules, processes, and accountability structures that determine how AI is developed and overseen.

Confusing the two isn’t just a vocabulary problem. It leads to teams believing they’re compliant when they’re not, or adding governance overhead when what they really need is model transparency. With new regulations and industry frameworks taking shape, the distinction matters more today than ever.

In this guide, we break down—clearly and with examples—what Explainable AI is, what AI governance is, how they work together, and how beginners should decide where to start.

Quick Answer

Explainable AI uses techniques like SHAP or LIME to make model decisions understandable—it’s for debugging and transparency. AI governance defines policies, processes, and controls for building AI systems responsibly—it’s for organizational oversight. Start with explainability for quick wins; add governance for high-risk or regulated systems.

What Explainable AI Actually Is

A clear definition

Explainable AI refers to methods and tools that help humans understand how an AI model produced its output. It’s rooted in research areas like interpretability, transparency, and post-hoc explanation. The field expanded rapidly after initiatives such as the DARPA XAI program helped formalize approaches for complex models.

In practice, XAI tries to answer:

“How did the model reach this decision, and can we explain it in human terms?”

How explainability works

A few methods have become industry defaults:

Feature attribution — Techniques like SHAP and LIME show which input features contributed most to a prediction. Example: For a loan denial, the model might highlight “credit utilization” as the highest contributor.

Counterfactual explanations — These show how a prediction would change if certain inputs changed. “If income were $5,000 higher, this applicant would be approved.”

Attention visualization — Used heavily in transformer models; visualizes where the model “focused” during text or image processing.

Intrinsic interpretability — Simpler models (decision trees, linear models) provide explanations directly because of their structure.

None of these methods “open the black box” completely, but they give practical insight into model behavior.
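To make feature attribution concrete, here is a minimal sketch that computes exact Shapley values, the quantity SHAP is built around, for a toy scoring function. It is not the SHAP library: `risk_score`, its weights, the feature names, and the baseline applicant are all hypothetical, and exact enumeration like this only scales to a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley attributions for a single prediction.

    Features outside a coalition are replaced by their baseline value.
    """
    names = list(instance)
    n = len(names)
    values = {}
    for target in names:
        others = [f for f in names if f != target]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight for a coalition of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                without = {
                    f: (instance[f] if f in coalition else baseline[f])
                    for f in names
                }
                with_target = {**without, target: instance[target]}
                total += weight * (predict(with_target) - predict(without))
        values[target] = total
    return values


def risk_score(x):
    """Hypothetical linear risk model (higher means riskier)."""
    return (0.6 * x["credit_utilization"]
            - 0.3 * x["income"]
            + 0.1 * x["late_payments"])


# Hypothetical, already-normalized feature values.
applicant = {"credit_utilization": 0.9, "income": 0.4, "late_payments": 2.0}
baseline = {"credit_utilization": 0.3, "income": 0.5, "late_payments": 0.0}

attributions = shapley_values(risk_score, applicant, baseline)
for name, value in sorted(attributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.2f}")
```

For this applicant the largest attribution goes to `credit_utilization`, and the attributions add up to the gap between the applicant’s score and the baseline score. That additivity is what makes Shapley-based explanations easy to report.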

What problem XAI solves

Explainable AI is designed for:

- Understanding model reasoning

- Debugging unexpected outputs

- Building trust with end-users

- Meeting transparency expectations under regulations

It answers “What did the model do?”, not “Should we have deployed this model?” or “Are we managing risk correctly?”

Real-world example

A credit scoring system uses SHAP to show customers why their loan was declined. This helps customers understand the decision and helps regulators verify that transparency requirements are met.
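The same credit scenario can also be explained with a counterfactual, as described earlier. The sketch below searches for the smallest income increase that would flip a denial. The decision rule `approve`, its threshold, and the applicant values are hypothetical stand-ins for a trained model; a real search would usually vary several features and respect plausibility constraints.

```python
def approve(applicant):
    """Hypothetical decision rule standing in for a trained credit model."""
    score = applicant["income"] - 500 * applicant["utilization_pct"]
    return score >= 20_000


def find_income_counterfactual(decide, applicant, step=500, max_increase=50_000):
    """Return the smallest income increase (a multiple of `step`) that gets
    the applicant approved, 0 if already approved, or None if no increase
    up to `max_increase` is enough."""
    if decide(applicant):
        return 0
    for increase in range(step, max_increase + step, step):
        candidate = dict(applicant, income=applicant["income"] + increase)
        if decide(candidate):
            return increase
    return None


applicant = {"income": 45_000, "utilization_pct": 60}
needed = find_income_counterfactual(approve, applicant)
if needed:
    print(f"If income were ${needed:,} higher, this applicant would be approved.")
```

Changing one feature at a time keeps the explanation easy to state to a customer, at the cost of missing cheaper changes that combine several features.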

Limitations of explainable AI

XAI is powerful but not magic:

- Explanations can be unstable, changing based on small input tweaks

- Some methods provide approximate reasoning, not the model’s true internal logic

- Explanations alone don’t guarantee fairness

- Regulators often require more than technical transparency—such as documentation, monitoring, and human oversight

- XAI helps you understand decisions, not manage the entire lifecycle of the system

What AI Governance Actually Is

A clear definition

AI governance is the collection of policies, processes, documentation practices, and oversight structures used to manage AI systems responsibly. Definitions vary slightly across frameworks, but they share a core idea:

Governance is organizational control, not a single tool or technique.

Frameworks such as the NIST AI Risk Management Framework, the OECD AI Principles, and emerging standards like ISO/IEC 42001 all emphasize structured oversight, documentation, risk assessment, and accountability.

What governance includes

AI governance typically involves:

- Policies and guidelines for development and deployment

- Role definitions (who approves models, who monitors them)

- Documentation standards (data sheets, model cards, decision logs)

- Risk assessments and audits

- Ongoing monitoring and performance reviews

- Incident reporting or escalation processes

- Data lineage records (where data came from and how it was transformed)

It’s closer to quality management or compliance than to model debugging.
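The documentation items above can start as a small structured record. The following is a minimal sketch of a model card that a team could store next to each model version. The `ModelCard` class and its field names are assumptions made for illustration, not a schema taken from NIST, ISO/IEC 42001, or any other framework.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ModelCard:
    """Hypothetical minimal model card; field names are illustrative."""
    name: str
    version: str
    intended_use: str
    owner: str      # who monitors the model
    approver: str   # who signs off before deployment
    training_data_sources: List[str] = field(default_factory=list)
    known_limitations: List[str] = field(default_factory=list)

    def missing_fields(self) -> List[str]:
        """Names of fields that are still empty, in declaration order."""
        return [key for key, value in asdict(self).items() if not value]


card = ModelCard(
    name="loan-risk",
    version="1.2.0",
    intended_use="Rank consumer loan applications for manual review",
    owner="ml-platform-team",
    approver="head-of-credit-risk",
    known_limitations=["Not validated for business loans"],
)

print(card.missing_fields())
print(json.dumps(asdict(card), indent=2))
```

Keeping the card as plain data means it can be versioned with the model and checked automatically, for example by refusing a release while `missing_fields()` is non-empty.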

What problem governance solves

Governance ensures:

- AI use aligns with organizational values

- Risks are identified and mitigated

- Decisions are documented and reviewable

- Teams remain accountable

- Compliance obligations are met when applicable

Where XAI answers the “how,” governance answers: “Are we building and deploying this system responsibly?”

Real-world example

A health-tech startup introduces a governance process requiring:

- A documented model approval workflow

- A standardized risk assessment

- Periodic model performance reviews

- Transparent documentation for clinicians

Explainability may feed into this process—but governance defines who does what, when, and how.
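One way to picture "who does what, when, and how" is a pre-deployment gate that lists what still blocks a release. This is a sketch under stated assumptions: the artifact names mirror the startup example above, while the 90-day review interval and the function name `deployment_blockers` are invented for illustration.

```python
from datetime import date, timedelta

# Hypothetical artifact names based on the example process above.
REQUIRED_ARTIFACTS = {
    "approval_signoff",
    "risk_assessment",
    "clinician_documentation",
}
REVIEW_INTERVAL = timedelta(days=90)  # assumed review cadence


def deployment_blockers(artifacts, last_review, today):
    """Return a list of reasons the model may not be deployed yet.

    An empty list means every governance check passed.
    """
    blockers = sorted(
        f"missing: {name}" for name in REQUIRED_ARTIFACTS - set(artifacts)
    )
    if today - last_review > REVIEW_INTERVAL:
        blockers.append("performance review overdue")
    return blockers


blockers = deployment_blockers(
    artifacts={"approval_signoff", "risk_assessment"},
    last_review=date(2024, 1, 1),
    today=date(2024, 6, 1),
)
print(blockers)
```

The gate does not judge the model itself. It only checks that the agreed process was followed, which is the division of labor between governance and explainability described here.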

Limitations of governance

- Processes can become bureaucratic if not scoped correctly

- Governance maturity varies widely across companies

- It does not improve model accuracy or technical transparency by itself

- It may require cross-functional coordination that small teams find challenging

- Governance creates guardrails; it doesn’t fix the model’s internal logic

Explainable AI vs AI Governance: The Real Differences

Beginners often assume these concepts are interchangeable because both appear in conversations about “responsible AI.” But their scopes and functions differ dramatically.

Technical transparency vs organizational control

- Explainable AI is a set of technical methods applied to the model.

- Governance is an organizational framework around the model.

One helps you understand predictions; the other helps you manage risk.

Comparison Table

| Aspect | Explainable AI | AI Governance |
|---|---|---|
| Primary focus | Understanding model decisions | Managing organizational risk |
| Level | Technical / model-level | Policy, process, and oversight |
| Tools | SHAP, LIME, counterfactuals | Frameworks, audits, documentation |
| Users | Data scientists, ML engineers | Compliance leads, PMs, leadership |
| Main benefit | Transparency & debugging | Accountability & responsible deployment |
| When to prioritize | Model debugging and validation | High-risk or regulated systems |

Where they overlap

Despite different scopes, the two intersect:

- Both support transparency

- Both can help satisfy regulatory expectations

- Both appear in “responsible AI” programs

- Both contribute to trustworthiness

- Explainability often becomes evidence—or input—within a governance framework

When you need both

Many industries now operate in environments where both technical transparency and governance oversight are expected. Examples include:

- Healthcare

- Finance

- Employment and hiring systems

- Sensitive biometric applications

In these contexts, explainability helps justify decisions; governance ensures decisions are documented, reviewed, and monitored.

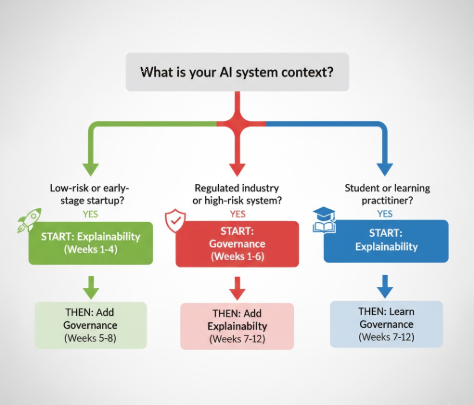

Which One Should Beginners Focus On? Your Decision Tree

The path forward depends on your context. Here’s how to decide:

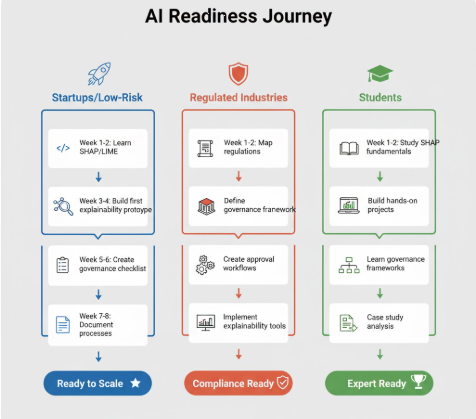

🟢 If you’re a small team or startup (low-risk systems)

START HERE: Explainability (Weeks 1-4)

- Use SHAP or LIME to debug model behavior

- Build trust with early customers by explaining decisions

- Cost: Minimal (tools are free; mainly engineering time)

THEN ADD: Lightweight governance (Weeks 5-8)

- Create simple decision logs and documentation templates

- Establish a basic model review checklist

- Define who approves models before deployment

Why this order: Speed matters when you’re validating product-market fit. Explainability gives immediate debugging value while you build governance processes.

🔴 If you’re in a regulated industry (high-risk systems)

START HERE: Governance (Weeks 1-6)

- Map your regulatory requirements (see table below)

- Define governance roles, approval workflows, and documentation standards

- Establish risk assessment processes

- Cost: Higher upfront (often requires consulting or internal resources)

THEN ADD: Explainability (Weeks 7-12)

- Implement SHAP, LIME, or counterfactuals as part of your risk assessment toolkit

- Use explainability to justify decisions in audit trails and compliance reports

- Document explanation methods in your governance framework

Why this order: Regulators expect documented processes first; explainability becomes evidence within that framework, not a substitute for it.

📚 If you’re a student or new practitioner

START HERE: Explainability (Weeks 1-6)

- Learn SHAP, LIME, and interpretability concepts deeply

- Build projects that showcase model understanding

- Experiment with different explanation techniques

THEN ADD: Governance concepts (Weeks 7-12)

- Study NIST AI Risk Management Framework

- Understand real-world governance practices through case studies

- Learn how teams apply governance at scale

Why this order: Understanding models deeply before learning governance frameworks makes both concepts stick.
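The three paths above can be summarized as a small lookup. This is only a sketch: the function name `recommend_plan` is hypothetical, and checking the regulated case first is an assumption, since this guide does not say what a student working on a regulated system should do.

```python
# Phase plans copied from the three paths above: (focus, weeks).
PLANS = {
    "regulated": [("governance", "1-6"), ("explainability", "7-12")],
    "student": [("explainability", "1-6"), ("governance concepts", "7-12")],
    "small_team": [("explainability", "1-4"), ("lightweight governance", "5-8")],
}


def recommend_plan(regulated_or_high_risk, student=False):
    """Pick a starting path. Regulated or high-risk work takes priority
    over the other two cases (an assumption, see the note above)."""
    if regulated_or_high_risk:
        return PLANS["regulated"]
    if student:
        return PLANS["student"]
    return PLANS["small_team"]


for focus, weeks in recommend_plan(regulated_or_high_risk=False):
    print(f"Weeks {weeks}: {focus}")
```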


Regulatory Requirements by Industry

If you work in any of these sectors, governance comes first:

| Industry | Primary Regulations | Key Requirements | Timeline |
|---|---|---|---|
| Healthcare | HIPAA, FDA guidance on AI/ML | Risk assessment, documentation, human oversight, audit trails | Implement within 6 months |
| Finance | Fair lending laws, SEC guidelines | Model validation, bias testing, explainability for credit decisions | Implement within 3-6 months |
| Employment/Hiring | EEOC, state AI bias laws | Fairness audits, transparency to candidates, documentation | Implement immediately if using AI for screening |
| Biometrics/Privacy | GDPR, BIPA, state privacy laws | Data minimization, consent, transparency, right to explanation | Implement before deployment |
| General (EU) | EU AI Act | Risk classification, documentation, conformity assessment | Implement by 2026 |

Key question: If a regulator asked for your AI governance documentation today, could you produce it? If not, start here before explainability.

Pricing & Value (Conceptual)

Explainable AI tools (like SHAP or LIME) are open-source and free. Their cost is the compute time and expertise required to interpret results.

AI governance is less about purchasing software and more about:

- Adopting public frameworks such as the NIST AI RMF (free to download) or ISO/IEC 42001 (a paid standard); implementation consulting can cost roughly $50K–$500K+ depending on organization size

- Defining processes and roles

- Documenting decisions

- Coordinating cross-functional teams

The “cost” is change management more than licensing.

Value depends on risk level:

- For low-risk systems, XAI alone may be enough.

- For high-risk systems, governance is non-optional. Skipping it risks regulatory fines, customer loss, or liability.

Who Should Use Each Approach?

Use Explainable AI if you are:

- An ML engineer

- A data scientist

- A product developer debugging or validating a model

- Someone building user-facing AI decisions

Use AI Governance if you are:

- A compliance lead

- A product manager overseeing deployment

- An AI program owner

- A team defining responsible AI policies

Use both if your system:

- Impacts access to credit, jobs, housing, healthcare, or other sensitive domains

- Is subject to regulatory scrutiny

- Requires human oversight

Explainability helps you understand the model. Governance helps you justify, document, and control how it’s used.

Conclusion

Explainable AI and AI governance are both essential—but they solve completely different problems. XAI tells you why a model made a specific decision. Governance tells you whether that model should be built, deployed, or monitored in a certain way.

Beginners often conflate the two because both appear in “responsible AI” discussions. But separating them clarifies priorities: in low-risk settings, start with transparency to understand models, then build governance to manage them responsibly; in regulated or high-risk settings, put governance in place first.

As regulation evolves and organizations mature their AI practices, the combination of explainability and governance will become the baseline, not the advanced tier. The teams that understand the distinction early will build more trustworthy—and ultimately more resilient—AI systems.


FAQ

1. Are explainability and AI governance the same thing?

No. Explainability is a technical method for interpreting model behavior. Governance is an organizational framework for managing risk and accountability.

2. When should I implement governance before explainability?

If your system is high-risk or in a regulated industry (healthcare, finance, hiring), governance comes first. Explainability becomes one component of your governance framework, not a substitute for it. See the regulatory requirements table above to identify your industry.

3. Is explainable AI required by law?

Some jurisdictions require transparency for certain high-risk AI systems, but requirements vary. The EU AI Act imposes transparency and documentation obligations on high-risk systems. GDPR Article 22 restricts solely automated decisions that have legal or similarly significant effects, and related GDPR provisions require meaningful information about the logic involved. In the U.S., fair lending rules (the Equal Credit Opportunity Act and Regulation B) require lenders to give applicants the specific reasons for a credit denial. Explainability can support compliance, but it’s not always legally mandated on its own.

4. Can I use explainability tools without governance?

Yes. Many teams start with XAI for debugging. But for sensitive systems, governance ensures decisions are documented, reviewed, and monitored. If you’re in a regulated industry, skipping governance creates compliance risk.

5. Does governance guarantee fairness or accuracy?

No. Governance sets processes and controls; it does not fix model-level issues. Explainability, testing, and monitoring are still required. Governance is a guardrail, not a silver bullet.

6. What’s the easiest way for beginners to start?

For startups or low-risk systems: Begin with simple explainability tools (like SHAP or LIME), then add basic governance practices such as documentation templates and model review checkpoints.

For regulated industries or high-risk systems: Start with governance frameworks and documentation; add explainability as part of your compliance toolkit.

For students: Learn explainability first to understand models deeply, then study governance frameworks to understand how they’re applied in the real world.

Next Steps

- Beginners in low-risk domains: Download a free SHAP tutorial and try it on a sample dataset this week.

- Teams in regulated industries: Review your industry’s regulatory requirements in the table above; schedule a governance kickoff meeting.

- Students: Read the NIST AI Risk Management Framework overview to see how explainability and governance work together in practice.

Sources & References

Frameworks & Standards

- NIST AI Risk Management Framework – U.S. National Institute of Standards and Technology

- OECD AI Principles – OECD

- ISO/IEC 42001 – International Organization for Standardization (ISO) and International Electrotechnical Commission (IEC)

Explainability Tools & Research

- SHAP (GitHub) – SHapley Additive exPlanations

- LIME (GitHub) – Local Interpretable Model-agnostic Explanations

- DARPA XAI Program – Defense Advanced Research Projects Agency

Regulatory Requirements

- EU AI Act – European Commission

- GDPR Article 22 – General Data Protection Regulation

- HIPAA Compliance Guide – U.S. Department of Health & Human Services

- Fair Lending Compliance – Consumer Financial Protection Bureau

- EEOC AI Guidance – U.S. Equal Employment Opportunity Commission