Introduction

Chatbots handle over 85% of customer service interactions today. Most people have no idea how they work. You type a question and get an answer in milliseconds. That speed comes from three things happening in rapid succession: the system understanding what you want, searching for an answer, and generating a response. This article explains each step and why chatbots fail when you need them most.

Featured Snippet

A chatbot is software that simulates human conversation. Rule-based chatbots follow pre-written scripts. AI chatbots use natural language processing to understand what you actually mean, not just keywords. Both process input, search a knowledge base or generate responses, and deliver results in milliseconds. The difference: rule-based bots break when you phrase things unexpectedly. AI bots attempt to reason through unfamiliar requests.

Table of Contents

- What Exactly Is a Chatbot?

- The Two Main Types of Chatbots

- How Chatbots Work Behind the Scenes

- Key Technologies Powering Chatbots

- Real-World Applications Across Industries

- Why Chatbots Fail: Real Limitations

- The Future of Conversational AI

- FAQ

What Exactly Is a Chatbot?

A chatbot is a computer program designed to simulate conversation with humans. Instead of talking to a person, users type or speak to the bot, which replies. Unlike humans, chatbots operate 24/7 without breaks. They can manage thousands of simultaneous conversations because they run on cloud servers.

The core distinction lies in architecture. Rule-based chatbots follow decision trees. If a user types "hours," the bot retrieves pre-written information about business hours. AI-powered chatbots interpret what users mean regardless of exact wording. Ask "When do you close?" or "What time do you shut down?" and an AI chatbot understands that both refer to operating hours.

This distinction determines what tasks each type can handle. A rule-based bot fails when encountering input it wasn't programmed for. An AI bot attempts to reason through unfamiliar queries, though it may still misunderstand.

The Two Main Types of Chatbots

Rule-Based Chatbots

Rule-based systems operate on conditional logic. A programmer creates decision trees: "If user types X, show response Y." These bots excel at repetitive, predictable tasks because they follow exact scripts.

A bank bot handling balance inquiries works this way. User asks "What's my account balance?" The bot matches keywords, queries the account database via API, and returns the number. It works reliably for this narrow use case.

Limitations appear immediately when users deviate from expected inputs. Ask "How much money do I have?" instead of "What's my balance?" and a narrowly scripted rule-based bot may fail or return irrelevant responses. According to Zendesk research, 55% of businesses now use chatbots, but many still rely on rule-based systems that frustrate users when conversation moves beyond scripts.
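The keyword matching described above can be sketched in a few lines. This is a minimal illustration, not how any particular product works: the rules, replies, and the function name rule_based_reply are all hypothetical.

```python
# Toy rule-based bot: each rule maps a set of trigger keywords to a canned reply.
# The rules and replies below are hypothetical examples.
RULES = [
    ({"balance"}, "Your balance is shown under Accounts > Overview."),
    ({"hours", "open", "close"}, "We are open 9am to 5pm, Monday to Friday."),
]
FALLBACK = "Sorry, I didn't understand that."


def rule_based_reply(message: str) -> str:
    """Return the reply of the first rule whose keywords appear in the message."""
    cleaned = message.lower().replace("?", " ").replace("'", " ")
    words = set(cleaned.split())
    for keywords, reply in RULES:
        if words & keywords:  # at least one trigger keyword is present
            return reply
    return FALLBACK


print(rule_based_reply("What's my balance?"))         # matches the "balance" rule
print(rule_based_reply("How much money do I have?"))  # no keyword present, so fallback
```

The second call falls through to the fallback because none of its words appear in any rule, even though a human would read it as the same question.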

AI-Powered Chatbots

AI chatbots use natural language processing and machine learning to understand intent and context. They don't rely on exact keyword matching. They parse sentence structure, identify what the user wants, and generate contextually appropriate responses.

A customer service chatbot handling product returns works this way. Whether users say "I want to return this," "Can I send this back?" or "This doesn't work, what do I do?" the bot recognizes the underlying intent and guides them through the process.

This flexibility comes with real costs. AI chatbots require significantly more training data and computational resources than rule-based systems. They can also hallucinate: MIT Technology Review has reported that advanced AI chatbots sometimes state false information with full confidence. A chatbot might invent a product SKU or fabricate a policy because the underlying language model completed a pattern incorrectly. This is particularly dangerous in healthcare or financial contexts.

How Chatbots Work Behind the Scenes

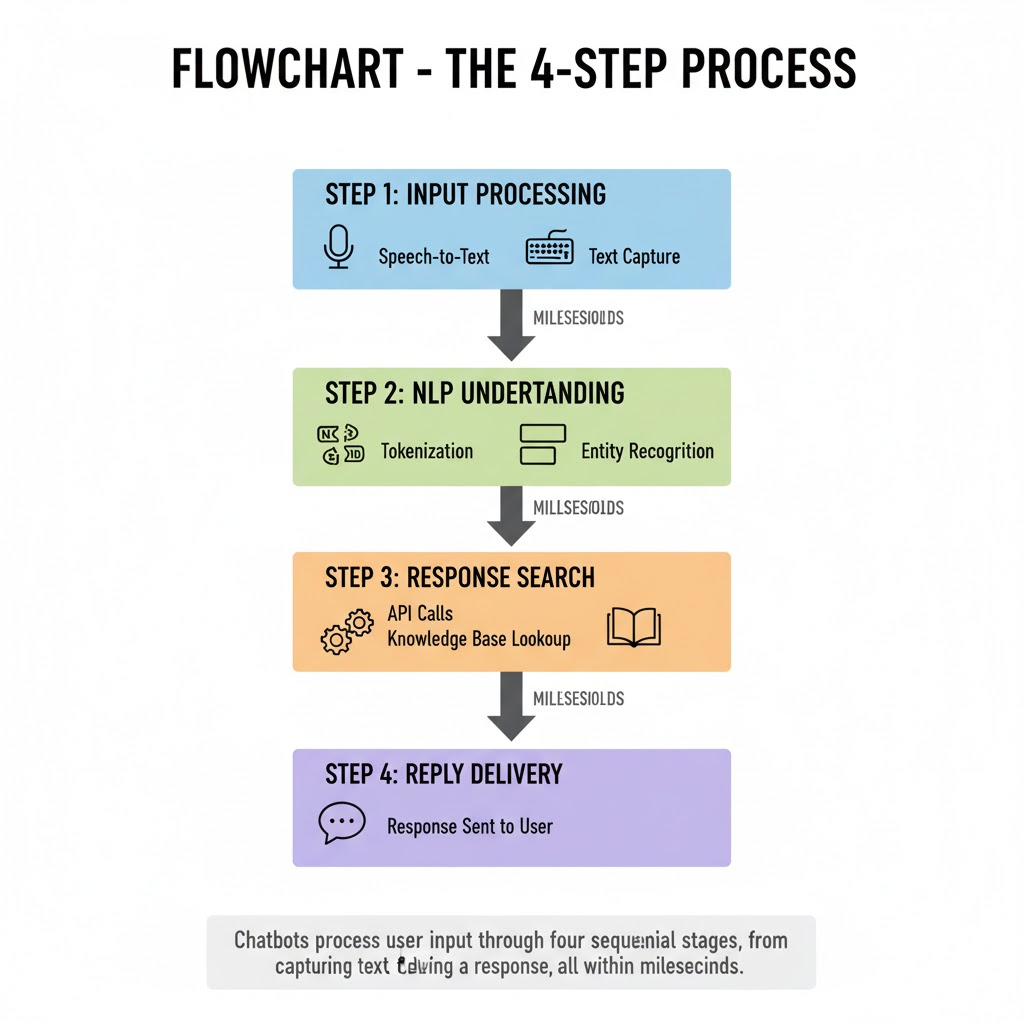

The process happens in four steps, all completed within milliseconds.

Step 1: Input Processing

When you type or speak, the chatbot first converts your input into readable data. For text input, the system captures what you typed. For voice input, speech-to-text technology converts audio into written text. This text becomes the data the chatbot analyzes.

Step 2: Understanding the Message

This is where natural language processing enters. The system breaks your sentence into components: it identifies keywords, determines semantic meaning, and recognizes intent from context.

If you type "Book me a flight to New York next Friday," the NLP system identifies: Action = "book," Object = "flight," Destination = "New York," Time = "next Friday." Rule-based bots would only catch keywords. NLP understands the relationships between them.

This matters because users rarely use identical phrasing. NLP handles variation. "Get me a ticket to NYC for next week" expresses the same booking intent and destination, even though almost none of the words match; only the travel date differs.
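To make the flight example concrete, here is a rough sketch of slot extraction. It uses hand-written lookup tables and regular expressions as a stand-in for a trained NLP model, so treat it as an illustration of the output shape only. The tables, the slot names, and the function extract_slots are assumptions made for this example.

```python
import re

# Hypothetical lookup tables; a real NLP model would learn these mappings from data.
ACTION_WORDS = {"book": "book", "get": "book", "reserve": "book"}
OBJECT_WORDS = {"flight": "flight", "ticket": "flight"}
CITY_ALIASES = {"new york": "New York", "nyc": "New York"}
TIME_PATTERN = re.compile(
    r"\bnext (week|monday|tuesday|wednesday|thursday|friday|saturday|sunday)\b"
)


def extract_slots(message: str) -> dict:
    """Pull action, object, destination, and time out of a booking request."""
    text = message.lower()
    slots = {"action": None, "object": None, "destination": None, "time": None}
    for word in re.findall(r"[a-z]+", text):
        if slots["action"] is None and word in ACTION_WORDS:
            slots["action"] = ACTION_WORDS[word]
        if slots["object"] is None and word in OBJECT_WORDS:
            slots["object"] = OBJECT_WORDS[word]
    for alias, city in CITY_ALIASES.items():
        if re.search(rf"\bto {alias}\b", text):
            slots["destination"] = city
    match = TIME_PATTERN.search(text)
    if match:
        slots["time"] = match.group(0)
    return slots


print(extract_slots("Book me a flight to New York next Friday"))
print(extract_slots("Get me a ticket to NYC for next week"))
```

Both phrasings resolve to the same action, object, and destination. Only the time slot differs.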

Step 3: Finding or Generating the Right Response

The chatbot now searches for an appropriate response. Rule-based bots look up answers in their programmed decision tree. AI chatbots search a knowledge base or use machine learning models to generate novel responses. Advanced systems pull real-time data through APIs (your account balance, flight availability, order status).

Step 4: Delivering the Reply

Finally, the response is sent back. Rule-based bots retrieve pre-written answers. Modern AI chatbots generate responses word-by-word using language models, constructing grammatically correct, contextually relevant replies in real time. The entire cycle happens in under 500 milliseconds.
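The four steps can be sketched as a small pipeline. This is a simplified outline under stated assumptions: the knowledge base, the keyword hints that stand in for a real NLP model, and every function name here are hypothetical.

```python
import re
from typing import Optional

# Hypothetical knowledge base: intent name -> pre-written answer.
KNOWLEDGE_BASE = {
    "opening_hours": "We are open 9am to 5pm, Monday to Friday.",
    "return_policy": "You can return items within 30 days of delivery.",
}
# Hypothetical keyword hints standing in for a trained NLP model.
INTENT_KEYWORDS = {
    "opening_hours": {"hours", "open", "close"},
    "return_policy": {"return", "refund"},
}


def process_input(raw: str) -> str:
    """Step 1: normalize the raw text (voice input would be transcribed first)."""
    return " ".join(raw.strip().lower().split())


def understand(text: str) -> Optional[str]:
    """Step 2: pick the intent whose keywords overlap most with the message."""
    words = set(re.findall(r"[a-z]+", text))
    best_intent, best_overlap = None, 0
    for intent, keywords in INTENT_KEYWORDS.items():
        overlap = len(words & keywords)
        if overlap > best_overlap:
            best_intent, best_overlap = intent, overlap
    return best_intent


def find_response(intent: Optional[str]) -> str:
    """Step 3: look the answer up, falling back when nothing matches."""
    return KNOWLEDGE_BASE.get(intent, "Let me connect you with a human agent.")


def deliver(reply: str) -> str:
    """Step 4: format the reply for the chat channel."""
    return f"Bot: {reply}"


def handle(raw: str) -> str:
    return deliver(find_response(understand(process_input(raw))))


print(handle("  When do you CLOSE? "))
print(handle("Tell me a joke"))
```

Keeping each step as its own function mirrors the description above and makes it easy to swap one stage, for example replacing the keyword lookup in step 2 with a trained model.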

Key Technologies Powering Chatbots

Natural Language Processing (NLP)

NLP is the foundational technology that allows chatbots to interpret human language. Without NLP, systems would only recognize exact keywords. With it, they understand intent, context, and even ambiguity.

NLP handles multiple tasks: tokenization (breaking text into words), entity recognition (identifying names, dates, locations), sentiment analysis (detecting tone), and semantic understanding (grasping meaning). Modern NLP uses transformer-based models like BERT or GPT architectures that analyze relationships between words across entire sentences. For a deeper dive into how language processing works, see our guide to natural language processing.
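Two of the tasks listed above, tokenization and entity recognition, can be illustrated with a deliberately simple sketch. Production systems use trained models rather than regular expressions. The place list, the date pattern, and the function names below are assumptions made for the example.

```python
import re
from typing import List, Tuple

WEEKDAY = r"(?:monday|tuesday|wednesday|thursday|friday|saturday|sunday)"
# Matches "Friday", "next Friday", or an ISO date such as 2025-11-03.
DATE_PATTERN = re.compile(
    rf"\b(?:next\s+)?{WEEKDAY}\b|\b\d{{4}}-\d{{2}}-\d{{2}}\b", re.IGNORECASE
)
# Hypothetical list of known place names.
KNOWN_PLACES = ["New York", "London", "Tokyo"]


def tokenize(text: str) -> List[str]:
    """Break text into lowercase word tokens, keeping contractions together."""
    return re.findall(r"[a-z0-9]+(?:'[a-z]+)?", text.lower())


def find_entities(text: str) -> List[Tuple[str, str]]:
    """Tag dates and known locations found in the text."""
    entities = [("DATE", m.group(0)) for m in DATE_PATTERN.finditer(text)]
    for place in KNOWN_PLACES:
        if re.search(rf"\b{re.escape(place)}\b", text, re.IGNORECASE):
            entities.append(("LOCATION", place))
    return entities


sentence = "Book me a flight to New York next Friday"
print(tokenize(sentence))
print(find_entities(sentence))
```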

The limitation: NLP works best in English with standard syntax. Slang, regional dialects, and non-English languages trip up most systems. Typos confuse many bots. Forrester research found that 60% of user frustrations with chatbots stem from the bot's inability to understand what the user actually wanted, even when the user's language was clear.

Machine Learning (ML)

Machine learning allows chatbots to improve from experience. Every conversation is logged. Patterns in user behavior and successful responses are identified. The system retrains on this data, improving future responses. To understand how machine learning powers modern AI systems, read our explainer on AI, machine learning, and deep learning.

ML models identify statistical patterns in language. If thousands of users ask "What's your return policy?" in slightly different ways, the ML system learns these variations all point to the same intent. Future phrasings are recognized faster.

The catch: ML requires massive amounts of training data. A poorly trained system can produce worse results than a simple rule-based bot because it confidently gives wrong answers instead of declining to respond.
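Here is a small sketch of both ideas: matching a new phrasing against labeled examples, and declining when confidence is low. It uses bag-of-words cosine similarity against a handful of made-up training phrases, which is far simpler than what production chatbots use. The training data, the 0.5 threshold, and the function names are assumptions.

```python
import math
import re
from collections import Counter
from typing import Optional, Tuple

# Hypothetical labeled phrases; a real system would have thousands.
TRAINING = [
    ("What's your return policy?", "return_policy"),
    ("Can I send this back?", "return_policy"),
    ("I want to return this", "return_policy"),
    ("When do you close?", "opening_hours"),
    ("What are your opening hours?", "opening_hours"),
]


def vectorize(text: str) -> Counter:
    """Turn text into a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def classify(message: str, threshold: float = 0.5) -> Tuple[Optional[str], float]:
    """Return (intent, score); intent is None when the best match is too weak."""
    query = vectorize(message)
    best_intent, best_score = None, 0.0
    for example, intent in TRAINING:
        score = cosine(query, vectorize(example))
        if score > best_score:
            best_intent, best_score = intent, score
    if best_score < threshold:
        return None, best_score  # decline rather than guess
    return best_intent, best_score


print(classify("How do I return this?"))
print(classify("Tell me a joke"))
```

Returning None below the threshold is the "declining to respond" behavior. Without that check, the classifier would always pick its closest guess, however weak.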

Application Programming Interfaces (APIs)

APIs connect chatbots to external systems. When you ask a banking bot "What's my balance?" the chatbot doesn't store your account data. It uses an API to request information from your bank's database, retrieves the current balance, and presents it to you.

APIs enable chatbots to access real-time information: flight availability, order status, inventory, weather, or customer records. Without APIs, chatbots would only reference static, pre-loaded information.
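The balance lookup might be structured along these lines. This sketch swaps the bank's real HTTP API for an in-memory stand-in so it runs offline. AccountAPI, FakeBankAPI, get_balance, and answer_balance_question are hypothetical names, not part of any real banking SDK.

```python
from typing import Dict, Protocol


class AccountAPI(Protocol):
    """The only thing the bot needs from the bank: a way to fetch a balance."""

    def get_balance(self, account_id: str) -> float: ...


class FakeBankAPI:
    """In-memory stand-in for a bank's API (assumption for this example)."""

    def __init__(self, balances: Dict[str, float]) -> None:
        self._balances = balances

    def get_balance(self, account_id: str) -> float:
        return self._balances[account_id]


def answer_balance_question(api: AccountAPI, account_id: str) -> str:
    """Fetch the live balance through the API; the bot itself stores no account data."""
    try:
        balance = api.get_balance(account_id)
    except KeyError:
        return "I couldn't find that account."
    return f"Your current balance is ${balance:,.2f}."


api = FakeBankAPI({"acc-1": 1234.5})
print(answer_balance_question(api, "acc-1"))  # Your current balance is $1,234.50.
print(answer_balance_question(api, "acc-9"))  # I couldn't find that account.
```

Because the bot depends only on the small AccountAPI interface, the fake can be replaced with a client for a real service without touching the conversation logic.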

Cloud Computing

Chatbots run on cloud servers, which is why they handle thousands of simultaneous conversations. Cloud infrastructure provides scalable processing power. When traffic spikes, the system automatically allocates more computing resources.

This scalability is cost-effective. Instead of building expensive on-premise servers that sit idle during low-traffic periods, companies pay only for computing power they actually use.
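A quick calculation shows why paying per use can beat provisioning for peak load. The traffic figures and the capacity of 500 conversations per instance are invented for illustration, and real autoscalers apply more nuanced rules than this ceiling division.

```python
import math
from typing import Dict, List


def instances_needed(conversations: int, capacity_per_instance: int) -> int:
    """Smallest instance count that covers the load, keeping at least one running."""
    return max(1, math.ceil(conversations / capacity_per_instance))


def instance_hours(hourly_load: List[int], capacity_per_instance: int) -> Dict[str, int]:
    """Compare autoscaled usage with fixed servers sized for the busiest hour."""
    per_hour = [instances_needed(n, capacity_per_instance) for n in hourly_load]
    return {
        "autoscaled": sum(per_hour),                     # pay for what each hour needs
        "fixed_peak": max(per_hour) * len(hourly_load),  # peak capacity kept on all day
    }


# Hypothetical concurrent conversations over six hours, with one traffic spike.
load = [200, 150, 1200, 4800, 900, 300]
print(instance_hours(load, capacity_per_instance=500))
# {'autoscaled': 18, 'fixed_peak': 60}
```

In this made-up scenario the autoscaled setup uses 18 instance-hours against 60 for hardware sized to the spike.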

Real-World Applications Across Industries

Customer Support

Chatbots now handle initial support interactions at many companies. They answer FAQs instantly, reducing wait times from hours to seconds. According to Zendesk research, 55% of businesses use chatbots for customer support, with automation handling routine inquiries so human agents focus on complex issues.

A customer service bot for an e-commerce site might handle order tracking, returns, and refund status before escalating to a human if needed.

E-Commerce

Retail chatbots serve multiple functions. They recommend products based on browsing history ("You viewed winter jackets, check out our thermal collection"). They track shipments and process simple returns. Some advanced bots handle product recommendations with 40% better accuracy than traditional recommendation engines.

Healthcare

Healthcare chatbots schedule appointments, send medication reminders, and provide preliminary symptom assessment. During the COVID-19 pandemic, chatbots screened patients for symptoms before they contacted healthcare providers, reducing unnecessary clinic visits.

Banking and Financial Services

Banking chatbots check account balances, transfer funds, flag suspicious transactions, and answer policy questions. They operate 24/7, which is essential for international banking, where staffed hours don't cover every time zone. Advanced banking AI also handles fraud detection in real time, which we cover in detail in our article on AI fraud detection in banking.

Education

Educational institutions deploy chatbots as virtual tutors. They answer student questions about course material, help with registration, and provide information about programs and deadlines.

Why Chatbots Fail: Real Limitations

Despite advances, chatbots have consistent failure patterns documented in user reviews and research.

Limited Understanding of Context

Chatbots struggle with nuance. If you say "That didn't work" after a failed solution, does "that" refer to the product, the latest troubleshooting step, or something mentioned earlier in the conversation? Humans resolve this instantly. Chatbots frequently misinterpret. Research from Forrester shows that 60% of user frustrations with chatbots stem from the bot's inability to understand what the user wanted, even when the user's language was clear.

Escalation Frustration

Users report high frustration when a chatbot can't hand them off to a human. Having to repeat the issue to a human agent after a bot has mishandled it makes the experience worse. Many companies still lack seamless handoff systems, so users end up restarting the conversation from scratch instead of being transferred smoothly to a person.
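One way to avoid making users repeat themselves is to pass the conversation context along with the transfer. The sketch below shows the general idea. The Conversation class, the build_handoff function, and the payload fields are hypothetical, not a specific vendor's handoff format.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Conversation:
    user_id: str
    transcript: List[Tuple[str, str]] = field(default_factory=list)  # (speaker, text)
    failed_attempts: int = 0

    def add(self, speaker: str, text: str) -> None:
        self.transcript.append((speaker, text))


def build_handoff(conv: Conversation, max_turns: int = 6) -> dict:
    """Package recent context so the human agent does not have to ask again."""
    user_messages = [text for speaker, text in conv.transcript if speaker == "user"]
    return {
        "user_id": conv.user_id,
        "issue_summary": user_messages[0] if user_messages else "",
        "recent_turns": conv.transcript[-max_turns:],
        "bot_attempts": conv.failed_attempts,
    }


conv = Conversation(user_id="u-42")
conv.add("user", "My order arrived damaged")
conv.add("bot", "Have you tried tracking your order?")
conv.failed_attempts += 1
conv.add("user", "That didn't help, I need a person")
print(build_handoff(conv))
```

The agent receives the first user message as a summary plus the most recent turns, which is usually enough to pick up where the bot left off.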

Language and Cultural Barriers

Natural language processing works best in English with standard syntax. Slang, regional dialects, and non-English languages trip up most systems. Typos confuse many bots. Cultural references and idioms often fail completely.

Security and Privacy Concerns

Chatbots that handle sensitive data (credit cards, health information, social security numbers) must maintain strict security protocols. Data breaches involving chatbots have exposed customer information. Additionally, poorly secured chatbots can inadvertently reveal confidential information if customers ask the right questions.
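One common precaution, offered here as an example rather than something the sources above prescribe, is to mask sensitive values before a message is logged or stored. The patterns below are rough assumptions: they catch simple card-number and US social security number formats and would miss many real-world variations.

```python
import re

# Rough patterns (assumptions): 13 to 16 digits with optional spaces or dashes,
# and the NNN-NN-NNNN social security number format.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(text: str) -> str:
    """Mask card and social security numbers before the message is logged."""
    # Redact SSNs first so the broader card pattern cannot swallow part of one.
    text = SSN_PATTERN.sub("[REDACTED SSN]", text)
    text = CARD_PATTERN.sub("[REDACTED CARD]", text)
    return text


print(redact("My card is 4111 1111 1111 1111, SSN 123-45-6789."))
print(redact("Where is order 12345?"))  # short numbers are left alone
```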

Hallucination in AI Models

Advanced AI chatbots sometimes hallucinate: they generate false information confidently. As MIT Technology Review has reported, a chatbot might invent a product SKU or fabricate a policy because the underlying language model completed a pattern incorrectly. This is particularly dangerous in healthcare or financial contexts where accuracy is critical.
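A simple guard against invented SKUs is to check every SKU in a generated reply against the real catalog before sending it. The following is a minimal sketch of that idea. The catalog contents, the SKU format (three letters, a dash, four digits), and the function names are all assumptions.

```python
import re
from typing import List, Set

# Hypothetical catalog and SKU format.
CATALOG: Set[str] = {"JKT-1001", "JKT-1002", "BTS-2040"}
SKU_PATTERN = re.compile(r"\b[A-Z]{3}-\d{4}\b")
SAFE_REPLY = "I'm not certain about that product. Let me connect you with an agent."


def unverified_skus(reply: str, catalog: Set[str] = CATALOG) -> List[str]:
    """Return SKUs mentioned in the reply that do not exist in the catalog."""
    return sorted(set(SKU_PATTERN.findall(reply)) - catalog)


def guard_reply(reply: str) -> str:
    """Send the reply only if every SKU it mentions can be verified."""
    return SAFE_REPLY if unverified_skus(reply) else reply


print(guard_reply("The thermal jacket is JKT-1001."))     # passes through unchanged
print(guard_reply("Try our new parka, SKU PRK-9999."))    # replaced with the safe reply
```

This only catches one narrow kind of hallucination. Fabricated policies or prices would need their own checks against a trusted source.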

The Future of Conversational AI

Multimodal Conversations

Future chatbots will integrate text, voice, images, and video. Instead of typing questions, users will show a product image and say "Can I return this?" The chatbot will process the image, understand spoken intent, and respond verbally.

Emotional Intelligence

Chatbots are gaining sentiment detection capabilities. Advanced systems recognize frustration in tone and adjust responses. Rather than continuing automated troubleshooting when a user is already upset, they escalate to a human agent immediately.
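The escalation logic can be sketched with a crude text-based frustration score. Real sentiment detection relies on trained models and, for voice, on acoustic cues. The word weights, the threshold of 3, and the function names here are assumptions chosen for illustration.

```python
import re

# Hypothetical frustration lexicon with weights.
FRUSTRATION_TERMS = {
    "useless": 2,
    "ridiculous": 2,
    "angry": 2,
    "frustrated": 2,
    "still": 1,
    "again": 1,
}


def frustration_score(message: str) -> int:
    """Sum lexicon weights and add up to two points for exclamation marks."""
    words = re.findall(r"[a-z']+", message.lower())
    score = sum(FRUSTRATION_TERMS.get(word, 0) for word in words)
    score += min(message.count("!"), 2)
    return score


def next_action(message: str, threshold: int = 3) -> str:
    """Escalate once the score reaches the threshold; otherwise keep automating."""
    if frustration_score(message) >= threshold:
        return "escalate_to_human"
    return "continue_bot_flow"


print(next_action("This is useless, it's STILL not working!!"))  # escalate_to_human
print(next_action("Where is my order?"))                         # continue_bot_flow
```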

Personalization at Scale

Chatbots will learn individual preferences. A regular customer's bot experience will adapt based on past interactions. Each user receives tailored recommendations and support rather than generic responses.

Voice-First Interfaces

Voice assistants (Alexa, Google Assistant, Siri) are merging with chatbot technology. Conversational AI through voice will become as common as text-based chat, especially for hands-free interactions in cars, smart homes, and wearables.

FAQ

Q: How much does it cost to build a chatbot?

A: Rule-based chatbots cost $5,000 to $50,000 to build, depending on complexity. AI-powered chatbots range from $50,000 to $500,000 or more because they require extensive training data, NLP expertise, and ongoing refinement. Cloud hosting adds $100 to $5,000 monthly depending on traffic.

Q: Can chatbots replace human customer service agents?

A: No. Chatbots handle routine inquiries (60 to 70% of support tickets) effectively, but complex issues require human judgment, empathy, and decision-making. The trend is hybrid: bots handle triage and escalate complex cases to humans.

Q: How accurate are chatbots at understanding questions?

A: Modern AI chatbots understand user intent correctly 70 to 85% of the time for common queries. For edge cases or unclear phrasing, accuracy drops significantly. This is why escalation to humans remains essential.

Q: What's the difference between a chatbot and a voice assistant?

A: Voice assistants like Alexa and Google Assistant perform broader functions: set reminders, control smart home devices, play music. Chatbots typically focus on specific tasks like customer support or product recommendations. The line is blurring as technology advances.

Q: How do chatbots learn over time?

A: Through machine learning. Each conversation is logged. Patterns in user behavior and successful responses are identified. The system retrains on this data, improving future responses. Some systems require explicit human feedback ("Was this response helpful?") to accelerate learning.

Sources

Zendesk. "The State of Customer Service in 2025." Zendesk Research. Accessed November 2025.

Forrester. "The State of Conversational AI 2024." Forrester Research. 2024.

MIT Technology Review. "Why AI Chatbots Still Hallucinate." MIT Technology Review. March 2024.

Google Cloud. "Introduction to Natural Language Processing." Google Cloud Documentation. Accessed November 2025.

Amazon AWS. "What is a Chatbot?" AWS Documentation. Accessed November 2025.

McKinsey & Company. "The Economic Potential of Generative AI." McKinsey Report. June 2024.