In August 2026, most of the European Union's AI Act becomes enforceable. Months before that, Illinois and Colorado flip the switch on their own rules. What most people don't realize: this isn't a distant policy debate. It's a hard deadline that's already reshaping how companies build AI.

The real story isn't that regulations are coming. It's that they're fragmenting. The EU imposes risk-based classifications. Colorado demands impact assessments. Illinois focuses on hiring bias. Companies now face a compliance matrix with no clear winner, and the cost of getting it wrong ranges from fines to product shutdowns.

Before diving into what changes, it helps to know how these systems actually work. Our guide to recommendation algorithms explains the exact technology regulators are now scrutinizing, and it's the best place to start if you're new to AI entirely.

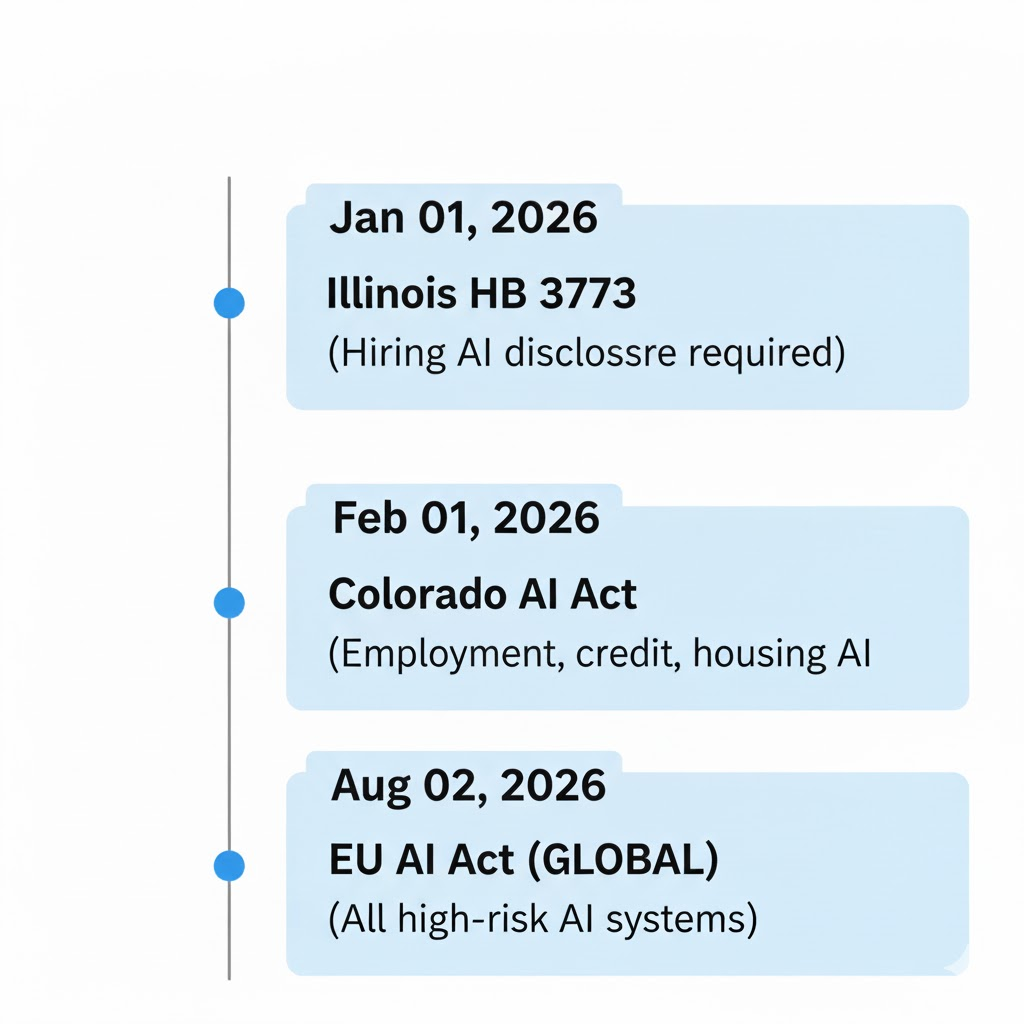

Key regulatory deadlines: Illinois HB 3773 (Jan 1, 2026), Colorado AI Act (Feb 1, 2026), EU AI Act (Aug 2, 2026)

This guide breaks down what actually changes, who gets hurt, and who might accidentally benefit.

Table of Contents

- What's Actually Happening in 2026

- The EU AI Act: Risk-Based Regulation Explained

- The U.S. Patchwork: Colorado, Illinois, and the State Wars

- How Companies Will Actually Feel This

- Who Wins and Who Loses Under These Rules

- Real Limitations of the Regulatory Approach

- Pricing and Compliance Costs

- FAQ

What's Actually Happening in 2026

Here's the practical answer: Three major regulatory frameworks go live between January and August 2026. The core of the EU's AI Act, including its rules for high-risk systems, becomes enforceable across all 27 member states. Illinois requires employers that use AI in hiring to notify applicants and explain how they're evaluated. Colorado mandates risk assessments for high-risk AI systems used in employment, credit, housing, insurance, and other consequential decisions.

On the surface, this sounds straightforward. In practice, it creates chaos. A chatbot deployed in the EU faces different rules than the same chatbot in Colorado. A hiring tool that complies with Illinois law might violate EU transparency requirements. Companies operating across these markets now need compliance teams that understand three distinct legal frameworks with overlapping jurisdictions and conflicting requirements.

The stakes are real. The EU AI Act permits fines of up to €35 million or 7% of global annual turnover, whichever is higher, for the most serious violations. That's potentially billions for a company like Meta. Colorado and Illinois penalties are lower but still material (thousands to tens of thousands of dollars per violation).

What makes this genuinely risky is that regulation is moving faster than most companies are preparing. A 2024 survey from the Center for AI Safety found that only 34% of companies had even started compliance planning for the EU AI Act, despite the August 2026 deadline being known for over a year.

The EU AI Act: Risk-Based Regulation Explained

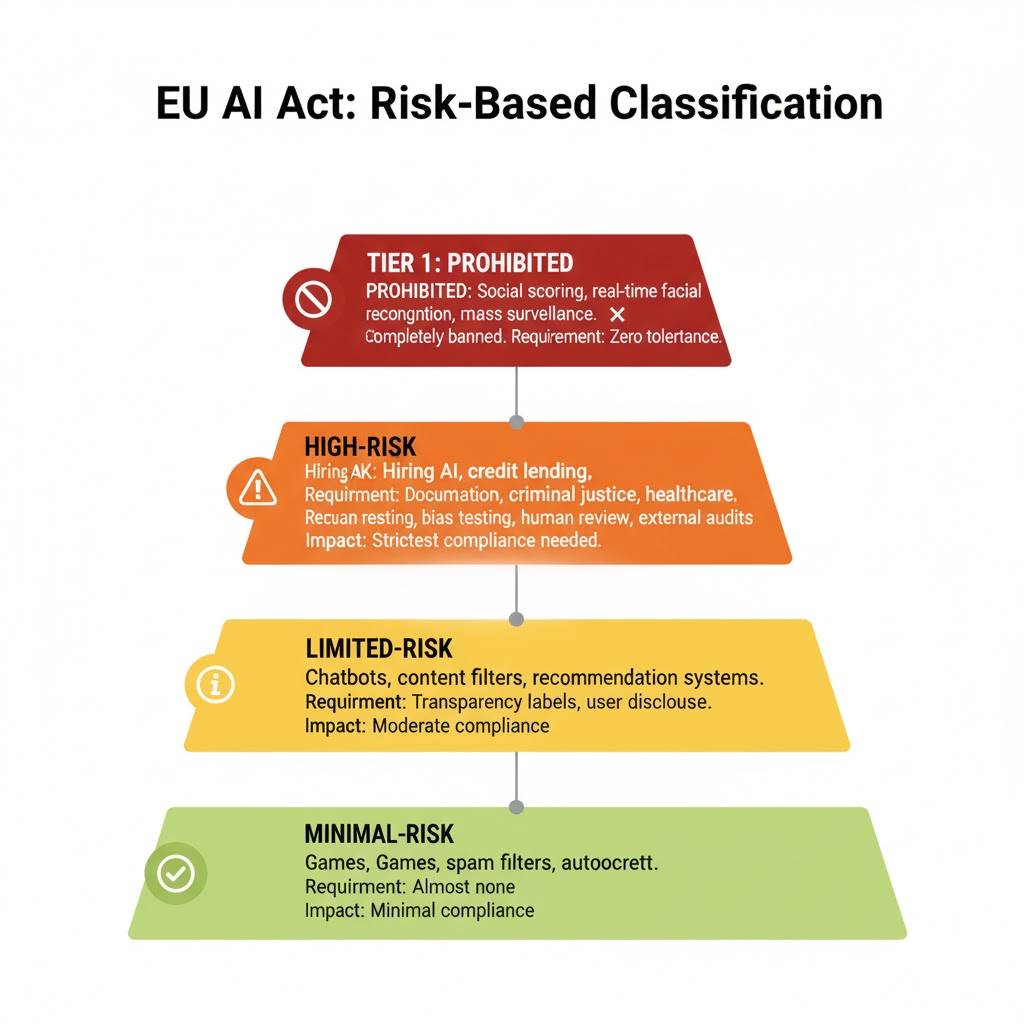

The EU AI Act sorts AI systems into four risk categories. This is the closest thing to a global standard we have, which matters because companies using EU-compliant AI often apply those same standards globally.

Prohibited and High-Risk AI Categories

Prohibited AI gets banned outright. This includes systems designed for social scoring (evaluating people based on their social behavior or personal traits in ways that lead to unjustified or disproportionate detrimental treatment), real-time remote biometric identification in public spaces for law enforcement (with narrow exceptions), and manipulative techniques that distort people's behavior in ways that cause significant harm. The reasoning is pragmatic: Europe judged that the risks in these categories couldn't be adequately mitigated.

High-risk AI covers systems in critical decision-making domains: hiring, credit lending, criminal justice, healthcare, and welfare benefits. These systems need documentation of training data, records of bias mitigation, human oversight mechanisms, and a conformity assessment before they go on the market. Importantly, the law requires effective human oversight in these areas: people must be able to monitor the system and override or disregard its output. Automated systems can screen candidates or flag credit risks, but a human needs to be able to make the final call.

The compliance burden here is substantial. You need detailed records showing what data you trained on, what you did to reduce bias, and how you tested it before launch. Large tech companies already do this. Smaller companies or startups using off-the-shelf AI models? They're scrambling because they don't have this infrastructure.
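What does "how you tested it" look like in practice? In hiring, a common first step is to compare selection rates across groups. The sketch below assumes you already have per-group counts of applicants and selections. The group names and numbers are made up. It flags any group whose selection rate falls below four-fifths of the highest group's rate, a screening heuristic from U.S. employment-selection guidelines. None of the laws discussed here prescribe this exact test, so treat it as a first-pass check rather than a compliance standard.

```python
from typing import Dict, List, Tuple

# outcomes maps a group label to (number selected, number of applicants).
Outcomes = Dict[str, Tuple[int, int]]


def selection_rates(outcomes: Outcomes) -> Dict[str, float]:
    """Share of each group's applicants that the system selected."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}


def impact_ratios(outcomes: Outcomes) -> Dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group had any selections")
    return {group: rate / highest for group, rate in rates.items()}


def flag_adverse_impact(outcomes: Outcomes, threshold: float = 0.8) -> List[str]:
    """Groups whose impact ratio falls below the threshold (0.8 = four-fifths rule)."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)


if __name__ == "__main__":
    # Made-up screening results for illustration only.
    results = {"group_a": (48, 100), "group_b": (30, 100)}
    print(impact_ratios(results))        # group_b's ratio is about 0.30 / 0.48 = 0.625
    print(flag_adverse_impact(results))  # ['group_b']
```

A flagged group is a signal to investigate, not proof of discrimination. Flagged results and the follow-up belong in the bias-testing records described above.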

Limited and Minimal-Risk Categories

Limited-risk AI includes chatbots and systems that generate synthetic content such as deepfakes. The requirement is transparency: users need to know they're interacting with AI, and deepfakes must be disclosed as artificially generated. If you're using an AI chatbot for customer service, you must disclose that fact. (Transparency for algorithmic content recommendations is handled mainly by a separate EU law, the Digital Services Act.)
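For a customer-service chatbot, the disclosure can live in the application layer rather than in the model. Here is a minimal sketch built on a session object of our own invention. `ChatSession` and the disclosure wording are hypothetical; the Act does not prescribe this text. The first reply in each conversation carries the notice, and later replies don't repeat it.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical disclosure wording; the law requires that users be informed,
# it does not prescribe this exact text.
AI_DISCLOSURE = "You are chatting with an automated AI assistant, not a person."


@dataclass
class ChatSession:
    """Tracks one conversation and whether the AI disclosure has been shown."""
    disclosed: bool = False
    transcript: List[str] = field(default_factory=list)

    def reply(self, model_output: str) -> str:
        # Attach the disclosure to the first assistant message only.
        if self.disclosed:
            message = model_output
        else:
            message = f"{AI_DISCLOSURE}\n\n{model_output}"
            self.disclosed = True
        self.transcript.append(message)
        return message


if __name__ == "__main__":
    session = ChatSession()
    print(session.reply("How can I help with your order?"))
    print(session.reply("Your refund was issued yesterday."))
```

Because the session keeps its transcript, the disclosure is also logged. That is useful if you ever need to show it was made.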

Minimal-risk AI covers everything else (games using AI, basic spam filters, autocorrect). These face almost no requirements.

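The practical problem: classifying AI correctly is harder than it sounds. Is a recommendation system used for job postings high-risk or limited-risk? The Act lists AI used to target job ads or filter applications as high-risk, but Article 6(3) carves out systems that don't pose a significant risk of harm, including ones that don't materially influence the outcome of a decision. What counts as "significant" or "material"? EU regulators are still working out the enforcement details.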

This is where it gets messy: there's no clear line. A recommendation system might rank candidates. Does that count as significantly influencing hiring? Nobody knows yet, so companies are guessing. If you want to understand the technical side of how these systems make decisions, this breakdown of chatbots and how data gets tracked shows why regulators care.
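To see where the judgment call sits, here's a deliberately simplified triage helper. The tier names follow the Act's four categories. The use-case labels and domain list are hypothetical shorthand for the categories described above; real classification depends on the Act's detailed annexes. The `materially_influences_decision` flag is the part companies are currently guessing about.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, heavily simplified labels based on the categories in this
# article; the Act itself relies on detailed article and annex text.
PROHIBITED_USES = {"social_scoring", "manipulative_techniques"}
HIGH_RISK_DOMAINS = {"hiring", "credit", "criminal_justice", "healthcare", "welfare_benefits"}
TRANSPARENCY_USES = {"chatbot", "synthetic_media"}


def triage(use: str, domain: Optional[str] = None,
           materially_influences_decision: bool = True) -> RiskTier:
    """Return the strictest tier that plausibly applies (a first pass only)."""
    if use in PROHIBITED_USES:
        return RiskTier.PROHIBITED
    # The unresolved judgment call lives in this flag.
    if domain in HIGH_RISK_DOMAINS and materially_influences_decision:
        return RiskTier.HIGH
    if use in TRANSPARENCY_USES:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


if __name__ == "__main__":
    print(triage("chatbot"))                              # RiskTier.LIMITED
    print(triage("candidate_ranking", "hiring"))          # RiskTier.HIGH
    print(triage("job_ad_recommender", "hiring", False))  # RiskTier.MINIMAL
```

Flipping that one flag moves the job-ad recommender from high-risk to minimal-risk. That is exactly the ambiguity regulators still need to resolve.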

Real companies are already feeling this. LinkedIn reported in early 2025 that they're rebuilding their recommendation engine to comply with the transparency requirements, essentially forcing them to make recommendations less effective because they need to explain how they work. That's a competitive disadvantage compared to non-EU platforms operating without these constraints.

Risk-based classification determines compliance requirements: prohibited systems face total bans, high-risk demands extensive documentation and human review

The U.S. Patchwork: Colorado, Illinois, and the State Wars

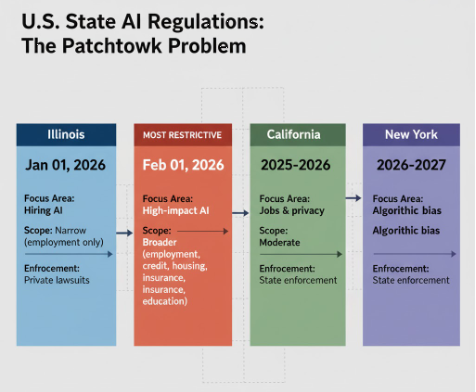

The United States has no comprehensive federal AI law. Instead, states are moving independently, creating a compliance nightmare for any company operating nationally.

Illinois Hiring AI Regulation

Illinois HB 3773 takes effect January 1, 2026. It amends the Illinois Human Rights Act and applies specifically to AI used in employment decisions such as hiring. The law requires employers to notify applicants that AI is screening them, disclose how the AI evaluates candidates, and take steps to ensure the system doesn't discriminate. There's no prior approval process: companies can deploy now, but they need to be able to document compliance if challenged.

Enforcement runs through the Illinois Human Rights Act: applicants can file complaints with the state's Department of Human Rights and, ultimately, sue. If someone can show they were discriminated against by an AI system and the company didn't disclose it, they have a claim. That creates an incentive to over-disclose rather than risk lawsuits. Some companies are now adding notices to job applications saying "an AI system may be used in screening" even when it's not, simply to avoid liability.
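Being able to document compliance if challenged mostly comes down to records. Here is a minimal sketch that assumes a hypothetical in-house `NoticeLog` and a stand-in scoring function. The screening step refuses to run for any applicant without a timestamped notice on file, so the log doubles as evidence.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Dict


@dataclass
class NoticeLog:
    """Records when each applicant was told that AI is used in screening."""
    notices: Dict[str, datetime] = field(default_factory=dict)

    def record_notice(self, applicant_id: str) -> None:
        self.notices[applicant_id] = datetime.now(timezone.utc)

    def has_notice(self, applicant_id: str) -> bool:
        return applicant_id in self.notices


def ai_screen(applicant_id: str, resume_text: str, log: NoticeLog,
              scorer: Callable[[str], float]) -> float:
    """Run the (hypothetical) scoring model only if a notice is on file."""
    if not log.has_notice(applicant_id):
        raise PermissionError(f"No AI-use notice recorded for applicant {applicant_id}")
    return scorer(resume_text)


if __name__ == "__main__":
    log = NoticeLog()
    log.record_notice("applicant-42")
    # A stand-in scorer; a real system would call its screening model here.
    print(ai_screen("applicant-42", "ten years of logistics experience", log,
                    lambda text: len(text) / 100))
```

Failing closed (raising instead of quietly scoring) means a missing notice surfaces as an error during testing, not as a liability after a complaint.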

Colorado's Broader Approach

Colorado's AI Act launches February 1, 2026, and it's broader. It covers AI used to make consequential decisions in employment, education, lending, housing, insurance, health care, legal services, and essential government services. Companies deploying high-risk AI need to complete impact assessments before deployment, maintain records of those assessments, and explain the principal reasons behind adverse decisions to the people they affect.

The key difference from Illinois: Colorado lets consumers appeal an adverse decision to a human reviewer where technically feasible, and it requires companies to point them to their existing right under state privacy law to opt out of certain automated profiling. That sounds consumer-friendly until you think through the logistics. If someone requests human review of a credit decision made by AI, someone has to manually review it. That's expensive, and it scales poorly. A company might suddenly face thousands of review requests that blow through its compliance budget.
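The scaling problem is simple arithmetic. This sketch converts review requests into reviewer headcount. The request volume, minutes per review, and 160-hour working month are all hypothetical inputs, not figures from the Colorado law or from any company.

```python
import math


def reviewers_needed(requests_per_month: int, minutes_per_review: float,
                     hours_per_reviewer_per_month: float = 160.0) -> int:
    """Full-time reviewers needed to clear a month's human-review requests."""
    total_hours = requests_per_month * minutes_per_review / 60
    return math.ceil(total_hours / hours_per_reviewer_per_month)


if __name__ == "__main__":
    # Hypothetical: 3,000 appeals a month at 20 minutes each is 1,000 hours,
    # which needs 7 reviewers working 160 hours a month.
    print(reviewers_needed(3_000, 20))  # 7
```

Headcount grows linearly with appeal volume. A spike in requests therefore shows up directly as payroll.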

The Fragmentation Problem

Other states are moving too. California is finalizing rules. New York is developing regulations on algorithmic bias. Washington is preparing its own framework. This fragmentation means a company could be compliant in Colorado, partially compliant in California, and in violation of New York standards, all at the same time and with the same AI system.

The practical outcome: companies are choosing which states to prioritize. Some are restricting AI use in high-regulation states and using it more liberally elsewhere. That's not really compliance; it's regulatory arbitrage. It works until federal law arrives, which feels inevitable.
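One way to track the patchwork is to treat each jurisdiction as a set of obligations. You then take the union of those sets for every place a product ships. The labels below are hypothetical shorthand for this article's summaries, not statutory terms. A real matrix would cite specific provisions.

```python
from typing import Dict, Iterable, Set

# Simplified, hypothetical obligation labels paraphrasing this article's summary
# of each law; a real matrix would cite specific provisions.
OBLIGATIONS: Dict[str, Set[str]] = {
    "EU": {"risk_classification", "technical_documentation", "human_oversight", "ai_disclosure"},
    "IL": {"applicant_notice", "non_discrimination"},
    "CO": {"impact_assessment", "consumer_notice", "adverse_decision_explanation",
           "human_review_appeal"},
}


def required_obligations(footprint: Iterable[str]) -> Set[str]:
    """Union of obligations across every jurisdiction the product operates in."""
    required: Set[str] = set()
    for jurisdiction in footprint:
        required |= OBLIGATIONS[jurisdiction]
    return required


def compliance_gaps(footprint: Iterable[str], implemented: Set[str]) -> Set[str]:
    """Obligations the product still has to cover."""
    return required_obligations(footprint) - implemented


if __name__ == "__main__":
    done = {"applicant_notice", "non_discrimination", "consumer_notice"}
    # ['adverse_decision_explanation', 'human_review_appeal', 'impact_assessment']
    print(sorted(compliance_gaps(["IL", "CO"], done)))
```

Building to the union of obligations is the opposite of the arbitrage strategy: more work up front, but one version of the product.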

Fragmented state regulations create compliance complexity: Illinois focuses on hiring (Jan 2026), Colorado covers broader high-impact systems (Feb 2026), other states follow different approaches

How Companies Will Actually Feel This

Product and Engineering Impact

For product teams: High-risk AI systems need redesign. Hiring algorithms that currently score candidates automatically now need human review steps. Recommendation systems that optimize purely for engagement now need to explain why they're recommending something. That's not a small change. It often means slower products, lower engagement metrics, and reduced business value.

A finance company testing a credit decisioning AI told us in late 2024 that adding explainability actually reduced the accuracy of their model. It's a trade-off: humans can understand a model that explains its reasoning, but that model is sometimes less predictive than a black-box model that humans can't interpret. Companies are now choosing between better performance and regulatory compliance, and whichever they pick, they give something up.
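Here's what "a model that explains its reasoning" can mean concretely. In a linear scorecard, each feature's contribution to the score is simply its weight times its value. The features that pulled an applicant's score down the most therefore double as reason codes. The features and weights below are made up for illustration. A black-box model can't produce exact contributions this way, which is the trade-off the finance company ran into.

```python
from typing import Dict, List

# Hypothetical weights for a linear credit scorecard. Because the score is a
# plain weighted sum, each feature's contribution can be read off exactly.
WEIGHTS: Dict[str, float] = {
    "payment_history": 2.0,     # share of on-time payments (0 to 1)
    "utilization": -1.5,        # share of available credit in use (0 to 1)
    "recent_inquiries": -0.8,   # credit applications in the last 6 months
    "account_age_years": 0.5,
}
INTERCEPT = -1.0


def contributions(applicant: Dict[str, float]) -> Dict[str, float]:
    """Weight times value for every feature."""
    return {f: w * applicant[f] for f, w in WEIGHTS.items()}


def score(applicant: Dict[str, float]) -> float:
    return INTERCEPT + sum(contributions(applicant).values())


def reason_codes(applicant: Dict[str, float], top_n: int = 2) -> List[str]:
    """Features that pushed the score down the most, worst first."""
    negatives = sorted((c, f) for f, c in contributions(applicant).items() if c < 0)
    return [f for _, f in negatives[:top_n]]


if __name__ == "__main__":
    applicant = {"payment_history": 0.9, "utilization": 0.8,
                 "recent_inquiries": 3, "account_age_years": 2}
    print(round(score(applicant), 2))  # -1.8
    print(reason_codes(applicant))     # ['recent_inquiries', 'utilization']
```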

For engineering teams: Documentation becomes the new battleground. Every high-risk AI system needs a paper trail: training data lineage, bias testing results, audit reports, human review logs. For teams accustomed to shipping fast and iterating, this is a culture shock. A model update that used to take days now requires documentation updates, bias retesting, and compliance sign-off.
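A lightweight way to enforce the paper trail is a release gate that blocks shipping until every documentation item is filled in. The checklist fields below are hypothetical and mirror the items listed above. A real pipeline would pull them from the team's own tooling.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ModelRelease:
    """Hypothetical paper-trail checklist for one model version."""
    version: str
    training_data_lineage: str = ""   # where the training data came from
    bias_retest_passed: bool = False  # bias tests re-run on this version
    audit_report: str = ""            # location of the latest audit report
    compliance_signoff: str = ""      # who signed off

    def missing_items(self) -> List[str]:
        missing = []
        if not self.training_data_lineage:
            missing.append("training data lineage")
        if not self.bias_retest_passed:
            missing.append("bias retest")
        if not self.audit_report:
            missing.append("audit report")
        if not self.compliance_signoff:
            missing.append("compliance sign-off")
        return missing

    def can_ship(self) -> bool:
        return not self.missing_items()


if __name__ == "__main__":
    release = ModelRelease("2.3.1", training_data_lineage="lineage.md", bias_retest_passed=True)
    print(release.can_ship())       # False
    print(release.missing_items())  # ['audit report', 'compliance sign-off']
```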

New Job Categories and Skills Gap

For compliance and legal: New roles are emerging. AI Compliance Officers, Ethics Specialists, and Explainability Engineers are now job postings at major tech companies. But there's a shortage. According to LinkedIn's job market data from 2024, AI compliance and governance roles were the fastest-growing segment in tech hiring, with demand outpacing supply. That means companies are bidding up salaries for people who understand both AI and regulation. Interested? Look at AI career paths and what AI ethics actually involves to see if this is your lane.

For hiring teams: AI used in recruiting needs transparency. Some companies are now labeling job postings as "screened by AI" or providing candidates with explanations of why they were rejected. This creates friction in recruiting. Candidates are more likely to dispute rejections if they know an algorithm made the call. That means more manual review, longer hiring cycles, and higher operational costs.

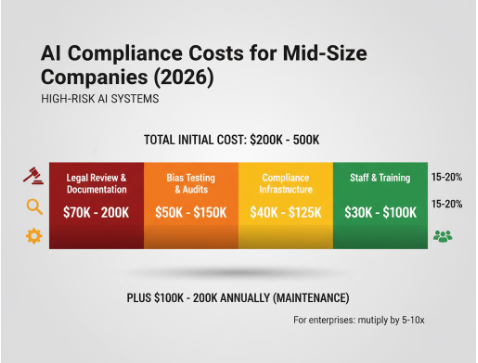

Initial compliance costs for mid-size companies: $200K-$500K for legal review, bias testing, documentation infrastructure, and dedicated staff

Who Wins and Who Loses Under These Rules

Losers: Startups with limited compliance infrastructure. A startup with five engineers and a million-dollar budget can't absorb the cost of compliance teams, legal expertise, and documentation infrastructure. They're competing against giants who can. The result: consolidation. Smaller AI companies either get acquired by bigger players who can handle compliance, or they exit the market.

Losers: Companies relying on opaque performance. Recommendation engines, pricing algorithms, and content moderation systems that work well but that nobody (including the company) fully understands face new transparency and documentation duties in the EU when they're used for high-risk purposes, and restrictions in Colorado when they drive consequential decisions. These companies need to rebuild their systems around interpretability, often at the cost of performance.

Winners: RegTech startups. Companies building compliance tools are growing fast. Platforms like Credo AI and Holistic AI, which automate bias detection, model documentation, and audit trails, are filling a real need. They're essentially selling peace of mind to companies that don't want to build this infrastructure themselves.

Winners: Established tech companies with existing compliance infrastructure. Google, Microsoft, and Amazon already have legal teams, documentation practices, and governance frameworks. They can adapt faster than competitors. The regulatory overhead that kills a startup barely registers.

Winners: Europe's tech industry. This is counterintuitive, but restrictive regulations often favor incumbents. By making it expensive and complex to build AI, the EU is making it harder for American and Chinese companies to compete in European markets. That creates space for European AI companies to build defensible positions without facing overwhelming competition.

Losers: Consumers in high-regulation jurisdictions. The systems you interact with will be slower, less personalized, and sometimes less effective. Your recommendations will be less accurate because the algorithm has to explain itself. Your hiring chances might be lower because companies are risk-averse about AI. Your credit decisions might take longer because human review steps are now required.

Real Limitations of the Regulatory Approach

Here's where regulation shows its cracks: the rules assume explainability and fairness are always possible. They're not.

Some AI systems are genuinely hard to explain. A deep neural network with billions of parameters that makes a healthcare diagnosis is making decisions based on patterns humans can't fully interpret. You can add explainability layers on top, but you're explaining the explanation, not the actual decision. This is called the "accuracy-interpretability tradeoff," and it's not solved. Companies are choosing between building systems that work and systems that can be explained. Regulators haven't acknowledged this trade-off exists.

The fairness question is even messier. The law requires that AI systems don't discriminate. But discrimination in hiring, lending, and housing is deeply embedded in historical data. An AI trained on historical hiring decisions will learn to discriminate because humans did. Removing bias requires either using different data (which might not exist), actively favoring underrepresented groups (which regulators sometimes call illegal reverse discrimination), or accepting that perfect fairness is impossible.

Real companies are struggling with this. A major bank told us they audited their lending AI and found it gave worse terms to certain demographics. They "fixed" it by removing demographic data as an input. But the AI then proxied those demographics using other variables: zip codes, credit card usage patterns, employment history. They're back where they started, just with an extra layer of obfuscation.
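The bank's problem can be checked directly. If you can guess someone's demographic group from a supposedly neutral feature better than chance, that feature is acting as a proxy. Here is a minimal sketch that assumes you hold group labels for testing purposes; the zip codes and groups are made up. It compares two numbers: the accuracy of guessing each person's group from the feature, and the base rate of always guessing the most common group.

```python
from collections import Counter, defaultdict
from typing import Dict, Hashable, Sequence, Tuple


def proxy_strength(feature: Sequence[Hashable],
                   group: Sequence[Hashable]) -> Tuple[float, float]:
    """Return (accuracy of guessing group from the feature, majority-class base rate)."""
    if len(feature) != len(group) or not group:
        raise ValueError("need equal-length, non-empty sequences")
    counts_by_value: Dict[Hashable, Counter] = defaultdict(Counter)
    for value, label in zip(feature, group):
        counts_by_value[value][label] += 1
    # For each feature value, guess its most common group and count the hits.
    hits = sum(c.most_common(1)[0][1] for c in counts_by_value.values())
    accuracy = hits / len(group)
    base_rate = Counter(group).most_common(1)[0][1] / len(group)
    return accuracy, base_rate


if __name__ == "__main__":
    zips = ["60601", "60601", "60601", "60629", "60629", "60629"]
    groups = ["A", "A", "A", "B", "B", "A"]
    # About (0.83, 0.67): zip code predicts group better than the base rate.
    print(proxy_strength(zips, groups))
```

This measures in-sample accuracy, so a feature with many unique values (an applicant ID, say) will look like a strong proxy even when it isn't. Run it on held-out data before drawing conclusions.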

The regulatory approach also assumes enforcement will be consistent. It won't be. The EU will enforce rigorously. Illinois will enforce through private litigation, which means enforcement depends on whether a well-funded plaintiff sues. Colorado's enforcement is still being worked out. This inconsistency creates arbitrage opportunities: companies optimize for the jurisdiction with the loosest enforcement, and that becomes the de facto standard.

There's also a real risk of regulatory capture. Once these laws are written, the companies that can afford to influence them will. We've already seen this starting: large tech companies are consulting with regulators in Europe, helping shape implementation guidance. That means regulations will likely become friendlier to incumbents over time, making it even harder for new entrants.

Pricing and Compliance Costs

Here's what compliance actually costs:

A mid-size company deploying a high-risk AI system in the EU is looking at $200K to $500K in initial compliance costs. That includes legal review, bias testing, documentation infrastructure, and staff time. Annual maintenance is another $100K to $200K. For startups, this is often a company-ending expense. For established companies, it's a line item they absorb.
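To turn those ranges into a multi-year budget, add maintenance to the initial spend. The sketch assumes maintenance starts in year two. The figures above don't say whether it overlaps with the initial build-out.

```python
def multi_year_cost(initial: float, annual: float, years: int) -> float:
    """Initial compliance spend plus maintenance for each year after the first.

    Assumes maintenance starts in year two.
    """
    if years < 1:
        raise ValueError("years must be at least 1")
    return initial + annual * (years - 1)


if __name__ == "__main__":
    low = multi_year_cost(200_000, 100_000, years=3)
    high = multi_year_cost(500_000, 200_000, years=3)
    print(f"Three-year range: ${low:,.0f} to ${high:,.0f}")  # $400,000 to $900,000
```

Against the million-dollar startup budget mentioned earlier, even the low end of that three-year range is 40% of the money.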

A large enterprise doing this across multiple systems might spend millions. Google and Microsoft are rumored to have compliance budgets in the tens of millions across all their AI products, though neither company publicly discloses these numbers.

The hidden cost is opportunity cost. Engineering resources spent on compliance documentation don't get spent on product development. Companies are shipping slower and innovating more cautiously. That affects the pace of AI development overall. From a business perspective, that's not necessarily bad: it means fewer AI-driven disasters. From a technology perspective, it means the rate of advancement slows down.

FAQ

Q: Does the EU AI Act apply to non-EU companies?

A: Yes. The Act applies to providers that place AI systems on the EU market, and to providers and deployers outside the EU whose systems' output is used in the EU. So even if you're based in the U.S., if your product reaches EU users, you need to comply. This is why the EU's regulations effectively become global standards: companies comply with EU rules everywhere to avoid managing different versions.

Q: What happens if I don't comply?

A: For the EU AI Act, fines reach up to €35 million or 7% of global annual turnover (whichever is higher) for prohibited practices, and up to €15 million or 3% for most other violations. For Colorado and Illinois, it depends on enforcement. Illinois relies on complaints and private litigation: someone has to bring a claim against you. Colorado relies on enforcement by the state attorney general. The point: ignoring compliance is a business risk, not just a legal issue.

Q: Can I use AI without complying?

A: In low-risk areas, yes. A video game using AI for difficulty adjustment faces minimal requirements. A hiring system faces substantial requirements. The law is categorically risk-based, so you can still use AI; you just need to understand what category your use case falls into and meet those requirements.

Q: Is there a grace period after August 2026?

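A: There's no general grace period for the obligations that kick in on August 2, 2026, though enforcement will likely be gradual, with regulators focusing on the most egregious violations first. Some deadlines differ: the bans on prohibited practices have applied since February 2025, and high-risk AI built into products already covered by EU safety legislation (such as medical devices) has until August 2027. Either way, companies should not assume they have time to fix things after their deadline. The law is enforceable immediately.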

Q: Will the U.S. pass federal AI regulation?

A: Almost certainly, eventually. But not before August 2026. When it does, it will likely land somewhere between the EU's strict approach and the current fragmented state approach. The smart move is to design for EU compliance now: if you can clear that bar, U.S. regulations will feel modest by comparison.

Sources:

- EU AI Act Official Text - The complete regulatory text, risk classifications, enforcement mechanisms, and timeline

- Illinois HB 3773 Bill Text - Specific requirements for AI hiring systems, disclosure obligations, and private right of action

- Colorado SB24-205, Consumer Protections for Artificial Intelligence (Colorado AI Act) - Impact assessment requirements, user rights, and enforcement mechanisms

- Center for AI Safety: 2024 Corporate AI Governance Survey - Compliance preparation data showing only 34% of companies started planning for EU AI Act

- LinkedIn Jobs Report: AI Governance Roles Growth - Fastest-growing job categories in tech, compliance hiring surge

- Brookings Institution: The Accuracy-Interpretability Tradeoff in Machine Learning - Analysis of why explainability reduces model performance in certain use cases